Knowledge Graphs (KGs) have become one of the most powerful tools for modeling the relations between entities in various fields, from biotech to e-commerce, from intelligence and law enforcement to fintech. Starting from the first version proposed by Google in 2012, the capabilities of modern KGs have been employed across diverse applications, including search engines, chatbots, and recommendation systems.

Two perspectives currently characterize KGs. The first focuses on knowledge representation, in which the graph is encoded as a collection of statements formalized using the Resource Description Framework (RDF) data model. Its goal is to standardize data publication and sharing on the Web, ensuring semantic interoperability. In the RDF domain, the core of intelligent systems is the reasoning performed on the semantic layer of the available statements.

The second perspective focuses on the structure (properties and relationships) of the graph. This vision is implemented in the so-called Labeled Property Graph (LPG). It emphasizes the features of the graph data, enabling new opportunities in terms of data analysis, visualization, and development of graph-powered machine learning systems to infer further information.

Before joining GraphAware, much of my experience in academia was devoted to KGs developed using RDF and ontologies. In this article, I want to describe the opportunities that adopting the LPG offers to improve and expand the functionalities available in the RDF standards and technologies. To analyze these opportunities concretely, I used the Neosemantics component of Neo4j. The results will be shown using the visualization component of the Hume platform.

Exploring the Neosemantics Features

The visions behind the two perspectives on KGs have a direct impact on the user experience. In the case of RDF graphs, different types of users, such as developers and data analysts, interact with data serialized as statements. Therefore, any operation performed on the graph implies manipulating such statements, even when using SPARQL, the query language for RDF graphs. In the LPG, the Cypher query language interacts more transparently with the graph structure in terms of nodes, edges, and related properties.

This subtle difference has a significant impact on user experience and performance. The redundancy of the data is strongly reduced in the LPG because all the statements (or triples) representing data properties, that is, those with literal values as objects, become properties of the node. As a consequence, where the RDF domain has multiple statements, one for each data property, the LPG has a single data structure: the node with its properties. Consider the following CONSTRUCT query, which gets all the triples from DBpedia that describe data properties (literal values) of Friedrich_Nietzsche:

PREFIX dbpedia: <http://dbpedia.org/resource/>

CONSTRUCT {

dbpedia:Friedrich_Nietzsche ?prop ?value .

}

WHERE {

dbpedia:Friedrich_Nietzsche ?prop ?value .

FILTER isLiteral(?value)

}

This query retrieves 98 statements, one for each data property. However, if we load all these statements into Neo4j using the Neosemantics plugin, we see that only a single node is created in the graph.
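To make the statement-to-node reduction concrete outside of any database, here is a minimal Python sketch. It is not how Neosemantics is implemented; the function name `fold_into_nodes` and the three sample statements are assumptions chosen for illustration, and the sketch keeps only one value per property.

```python
from collections import defaultdict

# Illustrative sample of (subject, predicate, literal) statements.
# These three rows are an assumption for the sketch, not the real query result.
TRIPLES = [
    ("dbpedia:Friedrich_Nietzsche", "dbo:birthYear", "1844"),
    ("dbpedia:Friedrich_Nietzsche", "dbo:deathYear", "1900"),
    ("dbpedia:Friedrich_Nietzsche", "foaf:name", "Friedrich Nietzsche"),
]


def fold_into_nodes(triples):
    """Group literal-valued statements by subject: one property map per subject."""
    nodes = defaultdict(dict)
    for subject, predicate, value in triples:
        # A repeated predicate overwrites the previous value in this sketch.
        nodes[subject][predicate] = value
    return dict(nodes)


nodes = fold_into_nodes(TRIPLES)
print(len(TRIPLES), "statements ->", len(nodes), "node(s)")
```

However many literal statements share the same subject, they end up in a single property map, which is the effect observed after the import.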

Before the data import, we can set an initial configuration for the graph database:

CALL n10s.graphconfig.set({

handleMultival: 'ARRAY',

multivalPropList : [

'http://dbpedia.org/ontology/abstract',

'http://www.w3.org/2000/01/rdf-schema#comment',

'http://www.w3.org/2000/01/rdf-schema#label']

});

This configuration is beneficial for information consistency. By default, Neosemantics loads only a single value for each property. However, in multilingual and large-scale KGs such as DBpedia, multiple values can be associated with a single data property. For instance, _rdfs:label_ usually includes all the labels of a specific entity, one for each language in which the entity is available on Wikipedia. After this initial configuration, we can use the Neosemantics fetch procedure to load the data into Neo4j. We start from the CONSTRUCT query introduced before.

CALL n10s.rdf.import.fetch("https://dbpedia.org/sparql","Turtle", {

headerParams: { Accept: "application/turtle"},

payload: "query=" + apoc.text.urlencode("

PREFIX dbpedia: <http://dbpedia.org/resource/>

CONSTRUCT {

dbpedia:Friedrich_Nietzsche ?prop ?value .

}

WHERE {

dbpedia:Friedrich_Nietzsche ?prop ?value .

FILTER isLiteral(?value)

}

")

});

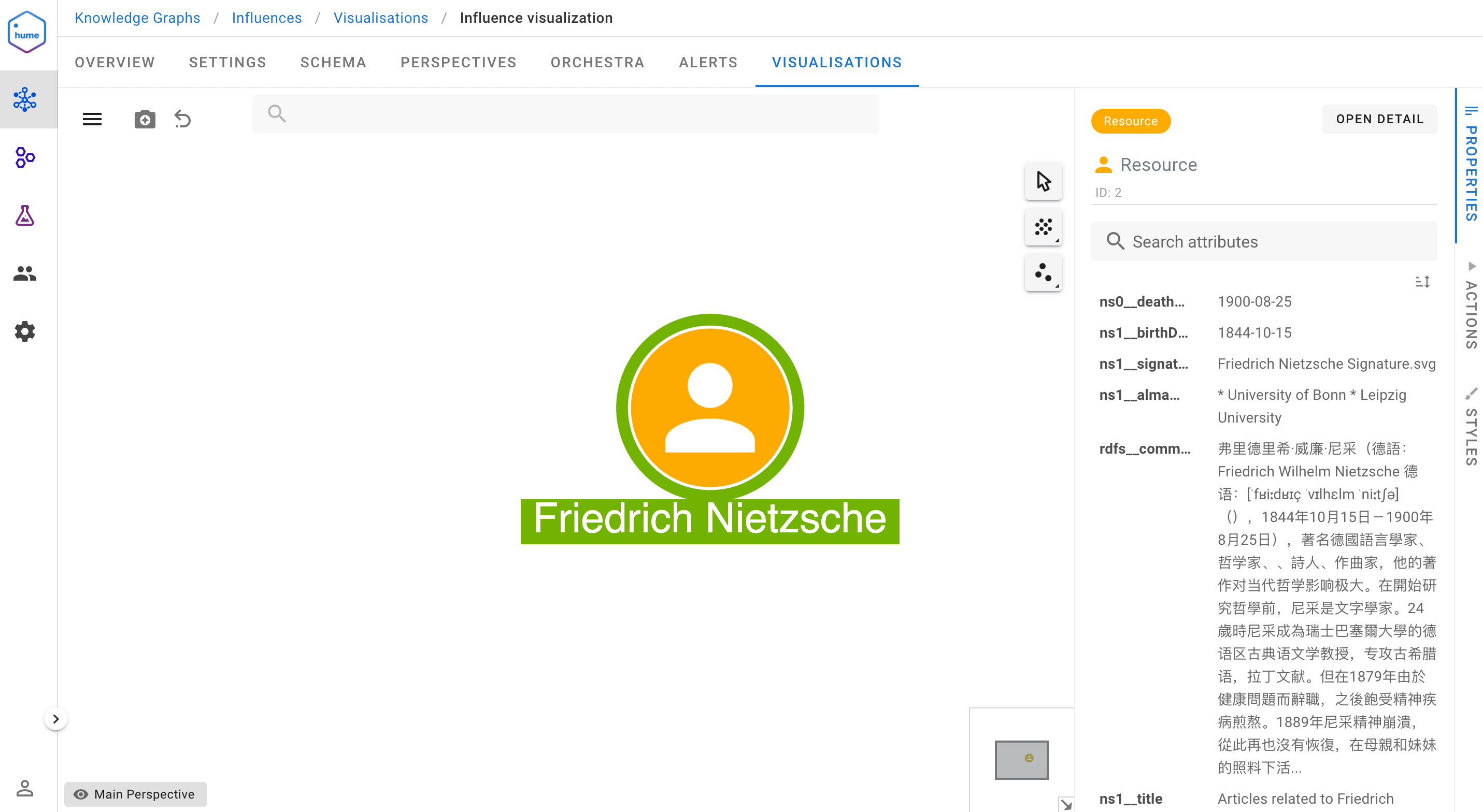

We employ the graph visualization feature of Hume to see the results:

As you can see in the Hume visualization system, a single node with the related properties is added to the canvas. As expected, the current fetching procedure added property values from different languages to maintain information consistency (in the following examples, we will see how we can apply a filter to the English language).
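The difference between keeping a single value and keeping an array of values can be sketched in a few lines of Python. This is only an illustration of the idea behind the multi-value configuration: `load_properties` is a hypothetical helper, the sample labels are illustrative, and the assumption that the single-value case keeps the last value read is mine.

```python
def load_properties(statements, multival_props=frozenset()):
    """Build a node's property map from (predicate, value) pairs.

    Predicates listed in multival_props collect every value in a list;
    all other predicates keep only the last value read (an assumption).
    """
    props = {}
    for predicate, value in statements:
        if predicate in multival_props:
            props.setdefault(predicate, []).append(value)
        else:
            props[predicate] = value
    return props


# Illustrative labels in three languages for the same entity.
LABELS = [
    ("rdfs:label", "Friedrich Nietzsche"),
    ("rdfs:label", "Фридрих Ницше"),
    ("rdfs:label", "フリードリヒ・ニーチェ"),
]

single_valued = load_properties(LABELS)
multi_valued = load_properties(LABELS, multival_props={"rdfs:label"})
print(single_valued)
print(multi_valued)
```

In the single-valued case two of the three labels are silently lost, which is why listing the multilingual properties in the configuration matters for consistency.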

Analyzing the features of RDF and LPG, we notice that the redundancy reduction also emerges when comparing equivalent SPARQL and Cypher queries. To observe this difference, firstly, we define a SPARQL CONSTRUCT query that creates the ‘influence’ chain among different philosophers. We also set a configuration to keep only the English labels. The result of this query is loaded into Neo4j using the Neosemantics fetch procedure:

CALL n10s.rdf.import.fetch("https://dbpedia.org/sparql","Turtle", {

handleVocabUris: "IGNORE",

languageFilter: "en",

headerParams: { Accept: "application/turtle"},

payload: "query=" + apoc.text.urlencode("

PREFIX dbo: <http://dbpedia.org/ontology/>

PREFIX dbp: <http://dbpedia.org/property/>

CONSTRUCT {

?parent dbo:influenced ?child .

?parent a dbo:Philosopher .

?child a dbo:Philosopher .

?parent dbp:birthDate ?birthDate1 .

?child dbp:birthDate ?birthDate2 .

?parent rdfs:label ?label1 .

?child rdfs:label ?label2 .

}

WHERE {

?parent dbo:influenced ?child .

?parent a dbo:Philosopher .

?child a dbo:Philosopher .

?parent dbp:birthDate ?birthDate1 .

?child dbp:birthDate ?birthDate2 .

?parent rdfs:label ?label1 .

?child rdfs:label ?label2 .

}")

});

Setting the language filter proved to be particularly useful. Almost 80% of the RDF triples were removed: only 2,005 of the 10,000 parsed triples were loaded (10,000 is the result limit of the public DBpedia endpoint). Then, starting from this graph of influence, we want to retrieve all philosophers at the third influence level (3 hops) above Friedrich Nietzsche, ordered by their birth dates. The related SPARQL query is the following:

PREFIX dbo: <http://dbpedia.org/ontology/>

PREFIX dbp: <http://dbpedia.org/property/>

PREFIX dbpedia: <http://dbpedia.org/resource/>

SELECT DISTINCT ?child3 ?label3 ?birthDate3 {

?child3 dbo:influenced ?child2 .

?child2 dbo:influenced ?child1 .

?child1 dbo:influenced dbpedia:Friedrich_Nietzsche .

?child3 a dbo:Philosopher .

?child2 a dbo:Philosopher .

?child1 a dbo:Philosopher .

?child3 rdfs:label ?label3 .

?child3 dbp:birthDate ?birthDate3 .

FILTER (lang(?label3) = 'en') .

} ORDER BY DESC(?birthDate3)

The corresponding Cypher query is the following:

MATCH (s:ns0__Philosopher)-[:INFLUENCED*3]->(nietzsche)

WHERE nietzsche.uri = 'http://dbpedia.org/resource/Friedrich_Nietzsche'

RETURN s

ORDER BY s.ns1__birthDate

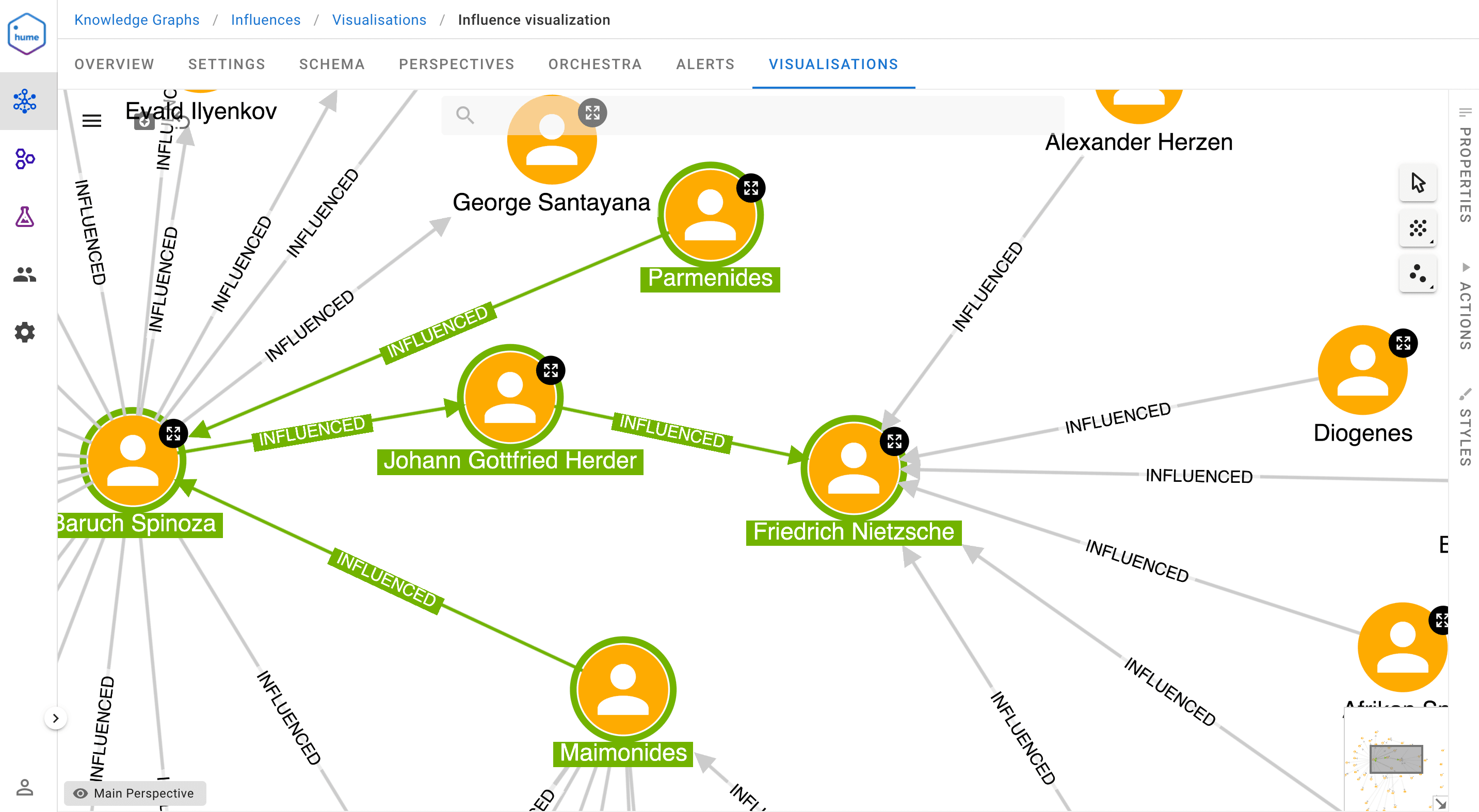

We can explore the results in the following Hume visualization (a subset of the results is visualized for clarity reasons):

As you can see from this example, the Cypher query is much more compact than the SPARQL query. The main reason is that SPARQL is a pattern-matching language over statements rather than a graph traversal language, so in this query every hop has to be specified explicitly by manipulating the statements. This has a crucial impact on query performance. Using SPARQL, the developer defines _how_ to traverse the graph to retrieve the information they need. Query execution therefore depends strictly on how the query is written, and it can be heavily inefficient if the patterns are not structured or ordered correctly.

On the other hand, the logic behind Cypher is to define _what_ to do, specifying the data to retrieve in a way that is very close to how humans think about navigating graph structures. Therefore, the performance depends on the query engine implementation, which directly computes the graph traversal policy. Another interesting element is that, in the SPARQL query, we have to specify the language of the labels using the following expression: FILTER (lang(?label3) = 'en'). In Cypher, we do not specify the label language because it was already set during the import.
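To give an intuition of what a fixed-length pattern such as `*3` asks the engine to compute, here is a small Python sketch that walks a toy influence graph backwards from the target node. It is not how Neo4j executes the query: the function name, the edge list, and the placeholder names P1 to P5 are hypothetical, and the sketch ignores Cypher's rule that a relationship cannot be repeated within one path (the two coincide on an acyclic toy graph like this one).

```python
def nodes_k_hops_before(edges, target, hops):
    """Return the nodes that reach `target` through exactly `hops` directed edges."""
    # Index incoming edges so the walk can go backwards from the target.
    incoming = {}
    for source, destination in edges:
        incoming.setdefault(destination, set()).add(source)

    frontier = {target}
    for _ in range(hops):
        # Replace the frontier with every node that points at a frontier node.
        frontier = {s for node in frontier for s in incoming.get(node, set())}
    return frontier


# Hypothetical (influencer, influenced) pairs; P1..P5 are placeholders.
EDGES = [
    ("P1", "P2"),
    ("P2", "P3"),
    ("P3", "Friedrich_Nietzsche"),
    ("P4", "Friedrich_Nietzsche"),
    ("P5", "P3"),
]

third_level = nodes_k_hops_before(EDGES, "Friedrich_Nietzsche", hops=3)
print(sorted(third_level))
```

The caller states only the target and the number of hops; the loop that expands the frontier plays the role of the traversal policy that the query engine decides for you.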

Impressions on Neosemantics

Drawing on my personal experience with RDF, I tested many features of Neosemantics, and there are others that I want to explore in the future. I found the graph configuration procedure very useful. As I mentioned before, this functionality allows applying transformations to the RDF graph according to the user’s specific needs. Typically, a general-purpose RDF graph available on the Web provides multiple values for a single data property such as rdfs:label, for instance labels in different languages. If the final user is interested only in the English label (without the language tag), this behavior can be set as a configuration parameter.
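As a rough illustration of what such a language filter does, the following Python sketch keeps the literals tagged with the requested language and drops the tag. The helper `keep_language` and the sample statements are hypothetical, and the choice to also keep untagged literals (such as dates or numbers) is an assumption of this sketch.

```python
def keep_language(statements, language):
    """Keep literals tagged with `language` or carrying no tag; drop the tag."""
    return [
        (predicate, text)
        for predicate, text, lang in statements
        if lang is None or lang == language
    ]


# Illustrative (predicate, text, language tag) statements for one entity.
STATEMENTS = [
    ("rdfs:label", "Friedrich Nietzsche", "en"),
    ("rdfs:label", "Фридрих Ницше", "ru"),
    ("rdfs:label", "フリードリヒ・ニーチェ", "ja"),
    ("dbo:birthYear", "1844", None),
]

english_only = keep_language(STATEMENTS, "en")
print(english_only)
```

Because the filter is applied once at load time, later queries no longer need to mention the language at all.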

Another useful configuration option concerns the removal of RDF namespaces, which I will explore further in the future. Namespaces are particularly useful when combining different ontologies covering the same domain, because they distinguish the origin of similar properties or classes. However, they risk adding only redundant information in enterprise KGs, which are usually built using a single, private ontology.
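The trade-off between dropping and shortening namespaces can be sketched in Python as follows. The helpers are hypothetical and only mimic the naming style visible in the Cypher query of this article (for example `ns0__Philosopher`); the order in which prefixes are assigned and the `example.org` URIs are assumptions.

```python
def split_uri(uri):
    """Split a URI into (namespace, local name) at the last '#' or '/'."""
    cut = max(uri.rfind("#"), uri.rfind("/")) + 1
    return uri[:cut], uri[cut:]


def ignore_namespace(uri):
    """Drop the namespace and keep only the local name."""
    return split_uri(uri)[1]


def shorten_namespace(uri, prefixes):
    """Replace the namespace with a generated prefix (ns0, ns1, ...)."""
    namespace, local = split_uri(uri)
    prefix = prefixes.setdefault(namespace, f"ns{len(prefixes)}")
    return f"{prefix}__{local}"


prefixes = {}
print(shorten_namespace("http://dbpedia.org/ontology/Philosopher", prefixes))
print(shorten_namespace("http://dbpedia.org/property/birthDate", prefixes))
print(ignore_namespace("http://dbpedia.org/ontology/Philosopher"))

# Two hypothetical vocabularies that define a property with the same local name.
a = "http://example.org/vocab-a/name"
b = "http://example.org/vocab-b/name"
print(ignore_namespace(a) == ignore_namespace(b))
print(shorten_namespace(a, prefixes) == shorten_namespace(b, prefixes))
```

Dropping namespaces gives the shortest names but merges same-named terms from different vocabularies, which is harmless with a single private ontology and risky when several ontologies are combined.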

I also appreciated the dynamic way to load and import RDF graphs into Neo4j, and I thoroughly explored the fetching procedure from SPARQL results. However, users can also load RDF data from static files available on the Web or on their own system. I believe that these import options cover the situations in which loading a file is an issue, and they spare users from writing glue code for importing data. An analogous principle is adopted by the Hume Orchestra component, which allows you to convert data from multiple and distributed sources (S3 buckets, Azure Blob Storage, third-party APIs, RDBMS tables, etc.) into a single and connected source represented by the KG.

Another feature is the mapping between the RDF graph model and the LPG graph model. This feature maps an RDF vocabulary onto graph elements (labels, property names, relationship names) and keeps the vocabularies used in the two domains distinct. For instance, we can map skos:narrower in RDF to the :CHILD relationship type in the LPG. RDF experts are very familiar with the concept of mapping languages: the World Wide Web Consortium (W3C) has created standards to formalize the mapping between relational database schemas and the RDF representation (see: https://www.w3.org/TR/r2rml/).
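The idea of such a mapping can be illustrated with a plain lookup table in Python. This is a sketch of the concept only, not the Neosemantics mapping API: the helper functions and the `example.org` concept URIs are hypothetical, while the skos:narrower to CHILD pair comes from the example above.

```python
SKOS = "http://www.w3.org/2004/02/skos/core#"

# RDF predicate URI -> relationship type used in the property graph.
MAPPING = {SKOS + "narrower": "CHILD"}


def to_relationship(triple, mapping):
    """Translate an RDF statement into (start node, relationship type, end node)."""
    subject, predicate, obj = triple
    return (subject, mapping.get(predicate, predicate), obj)


def to_triple(relationship, mapping):
    """Translate a relationship back into an RDF statement."""
    reverse = {rel_type: uri for uri, rel_type in mapping.items()}
    start, rel_type, end = relationship
    return (start, reverse.get(rel_type, rel_type), end)


# Hypothetical concept URIs used only for this example.
statement = (
    "http://example.org/concept/Philosophy",
    SKOS + "narrower",
    "http://example.org/concept/Ethics",
)

relationship = to_relationship(statement, MAPPING)
print(relationship)
print(to_triple(relationship, MAPPING) == statement)
```

Keeping the table separate from the data is what lets each side use its own vocabulary: graph users see CHILD, while exported RDF still carries skos:narrower.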

To conclude, my general impression is that Neosemantics allows preserving all the features from the RDF domain. The high level of configurability will enable users to select a subset of these features based on their needs, exploiting at the same time all the LPG capabilities. As I showed in this article, these capabilities enable you to interact more transparently with the graph data structures. RDF statements can be directly treated as nodes, relationships, and properties of the graph, which can be explored leveraging the simplicity and the expressiveness of the Cypher language.