Iterating over large numbers of nodes using Cypher is quite a common use case in Neo4j. Typically, the reason for doing this is that we want to perform some kind of operation for each one of these nodes. In this blog post, we will use one million TestNodes and try to iterate over them in order to index their contents into a freshly created Elasticsearch index. There are three approaches we can take, two of which are quite common, but the most performant technique is largely unknown.

First Technique : SKIP and LIMIT

Using SKIP and LIMIT is the first approach that comes to mind, especially if you’re used to working with other databases. Let’s give it a try and see how it performs. Note that we’re using our php driver, but the query times (in ms) should be about the same in any language.

$offset = 0;

$limit = 1000;

do {

$q = 'MATCH (n:TestNode) WITH n SKIP {offset} LIMIT {limit} SET n.indexed = true RETURN n.id as id';

$result = $this->client->sendCypherQuery($q, ['offset' => $offset, 'limit' => $limit])

->getResult();

$count = count($result->get('id'));

$offset += $count;

} while($count > 0);

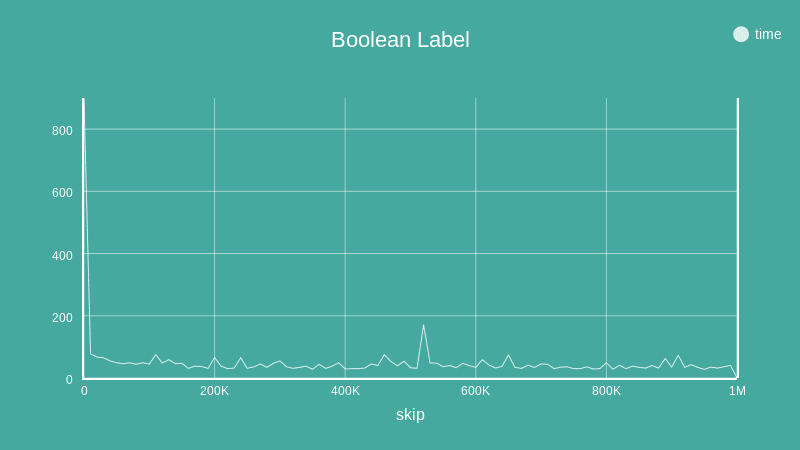

If we analyze the times taken to execute the queries, we’re not going to be impressed – the query times are growing linearly with the number of nodes we skip.

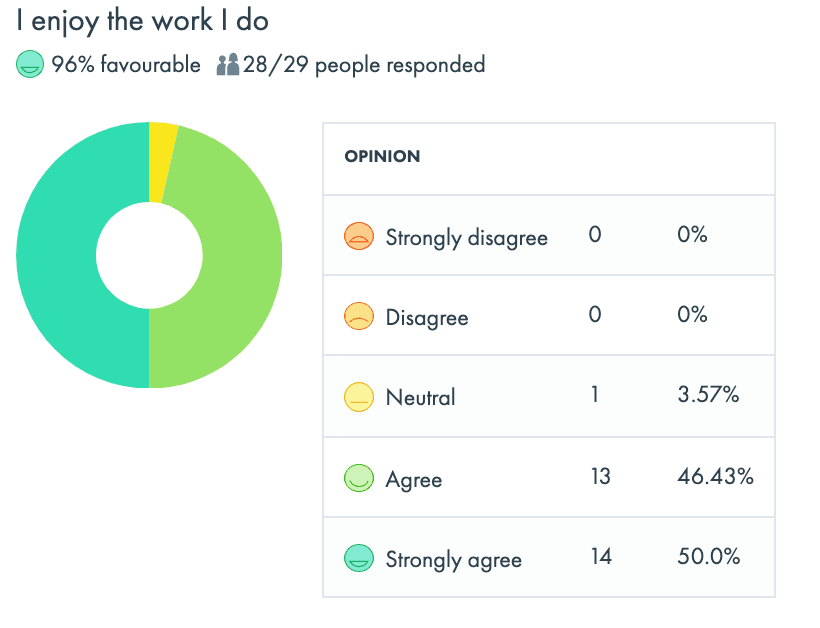

Let’s profile an intermediate query to see why this is the case:

PROFILE MATCH (n:TestNode) RETURN n.id as id SKIP 500000 LIMIT 1000

As we can see, all the SKIPped nodes we cause real database accesses, which is not really helpful in achieving good performance.

Second Technique : Indexed Property

The second approach is to create an index on a boolean property of a node. For each batch of nodes we have processed, we set the property to true:

CREATE INDEX ON :TestNode(flag)

do {

$q = 'MATCH (n:TestNode) WHERE NOT HAS(n.flag) WITH n LIMIT {limit} SET n.flag = true RETURN n.id as id';

$result = $this->client->sendCypherQuery($q, ['limit' => $limit])

->getResult();

$count = count($result->get('id'));

$offset += $count;

} while($count > 0);

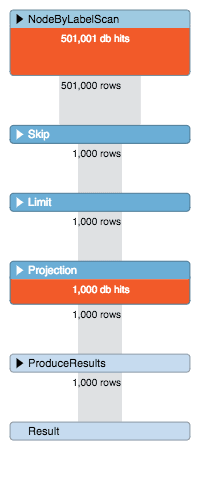

Not that much of an improvement when it comes to a large set of nodes. Let’s profile again:

We can see that when matching properties are found using the index, the database access count is equal to the number of nodes with those properties. Consequently, the query performance degrades over time, as more and more properties match the index lookup.

Third Technique : Boolean Labels

This technique is very similar to the previous one, except that we use a label to represent a boolean value. The presence of the label means true, its absence means false.

$limit = 1000;

do {

$q = 'MATCH (n:TestNode) WHERE NOT n:Flagged WITH n LIMIT {limit} SET n:Flagged RETURN n.id as id';

$result = $this->client->sendCypherQuery($q, ['limit' => $limit])

->getResult();

$count = count($result->get('id'));

$offset += $count;

} while($count > 0);

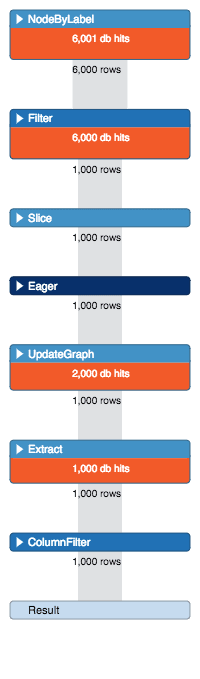

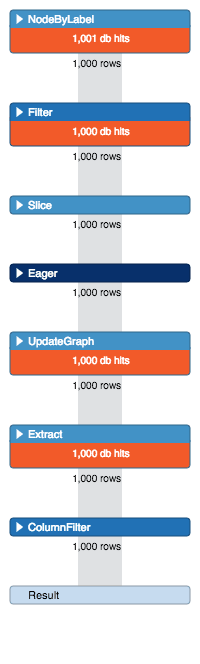

Wow, query times are an order of magnitude smaller and, perhaps more importantly, constant. Let’s quickly analyze the profile output:

The amount of database accesses per batch is 4 times the limit: one access per node label, one per filter on the second label, one per extraction of the node id property, and one per label update.

The next time you need to perform the same operation, the logical meaning of label presence can be inverted. In other words, the query can be inverted to matching on the “boolean” (WHERE instead of WHERE NOT) and remove it instead of adding it (REMOVE n:Flagged).

Conclusion

Using the PROFILE keyword, we’ve discovered bottlenecks in our Cypher queries and learned a new handy Cypher trick yet again. Please review our previous posts on the Cypher topic. And make sure you PROFILE your queries!