In our previous Recommendations with Neo4j and Graph-Aided Search post we introduced the concept of Graph Aided Search. It refers to a personalised user experience during search where the results are customised for each user based on information gathered about them (likes, friends, clicks, buying history, etc.). This information is stored in a graph database and processed using machine learning and/or graph analysis algorithms.

A simple example is the LinkedIn search functionality. If we typed “Michal” into the search box, it would return people whose name matches, ordered by full-text relevance with some fuzziness.

Lucene-based search engines such as Elasticsearch and Solr offer impressive performance (near real-time) when it comes to fulltext search or aggregations. However, since they are aggregate oriented databases, they have limitations when it comes to connected data. Native graph databases can perform queries requiring traversals of depth three or more in social and other networks, or scoring based on inferred relationships (shortestPath for example) in a more performant way.

In Graph-Aided Search, with the help of the Neo4j graph database, graph traversals, and a machine learning engine built on top of it, you can filter, order and boost the results returned by a text search engine based on social network or interest predicates. Sticking to the LinkedIn example from earlier, you may want to boost the score of some results for the user performing the search if:

- The person in the result has a lot of friends in common with the user

- The person worked for the same company as someone who endorsed the user’s skills

- The skills in common between the user and the person in the search result have approximately the same number of endorsements

- The degree of separation between the person returned and the user is really low

You may also want to filter out results or degrade the score if:

- The similarity of interests is low (no groups in common, low number of skills in common)

- The search result has been ignored many times during the recommendation process (People you may know)

- The search result has been blocked by people already connected with the user doing the search

It is worth noting here that this is how GraphAware facilitates the transition from “impersonal/general purpose” search, which produces the same result for all the users performing the query, to “personalised” search, where user history, interests, and behaviour matter and influence the final score and results list. This is a radical change in the way of thinking about simple text search. Here, graph databases play an important role, providing an efficient and effective native graph store and path navigation facilities.
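Two of the signals listed earlier, the number of friends in common and the degree of separation, can be sketched on a small in-memory graph. This is only an illustration: the `network` data and both function names are made up for this example, and in a real deployment these values would come from Cypher traversals in Neo4j rather than from Python.

```python
from collections import deque
from typing import Dict, Optional, Set

Graph = Dict[str, Set[str]]  # person -> set of direct connections


def common_friends(graph: Graph, a: str, b: str) -> int:
    """Number of people directly connected to both a and b."""
    return len(graph.get(a, set()) & graph.get(b, set()))


def degrees_of_separation(graph: Graph, start: str, goal: str) -> Optional[int]:
    """Breadth-first search; returns the hop count, or None if unreachable."""
    if start == goal:
        return 0
    visited = {start}
    queue = deque([(start, 0)])
    while queue:
        person, depth = queue.popleft()
        for friend in graph.get(person, set()):
            if friend == goal:
                return depth + 1
            if friend not in visited:
                visited.add(friend)
                queue.append((friend, depth + 1))
    return None


# Hypothetical social network used only for this illustration.
network: Graph = {
    "ann": {"bob", "cid"},
    "bob": {"ann", "dan"},
    "cid": {"ann", "dan"},
    "dan": {"bob", "cid", "eve"},
    "eve": {"dan"},
}

print(common_friends(network, "ann", "dan"))
print(degrees_of_separation(network, "ann", "eve"))
```

A search layer could then boost a hit when the first value is high or the second one is low.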

Some time after we introduced the Graph-Aided Search concept at GraphConnect San Francisco, we released a plugin named neo4j-to-elasticsearch providing near real-time replication of Transaction Event data to an Elasticsearch cluster.

We’re now delighted to open-source the missing part of the puzzle, an Elasticsearch plugin called Graph-Aided Search, which extends the search query DSL and offers a connection to a Neo4j database endpoint.

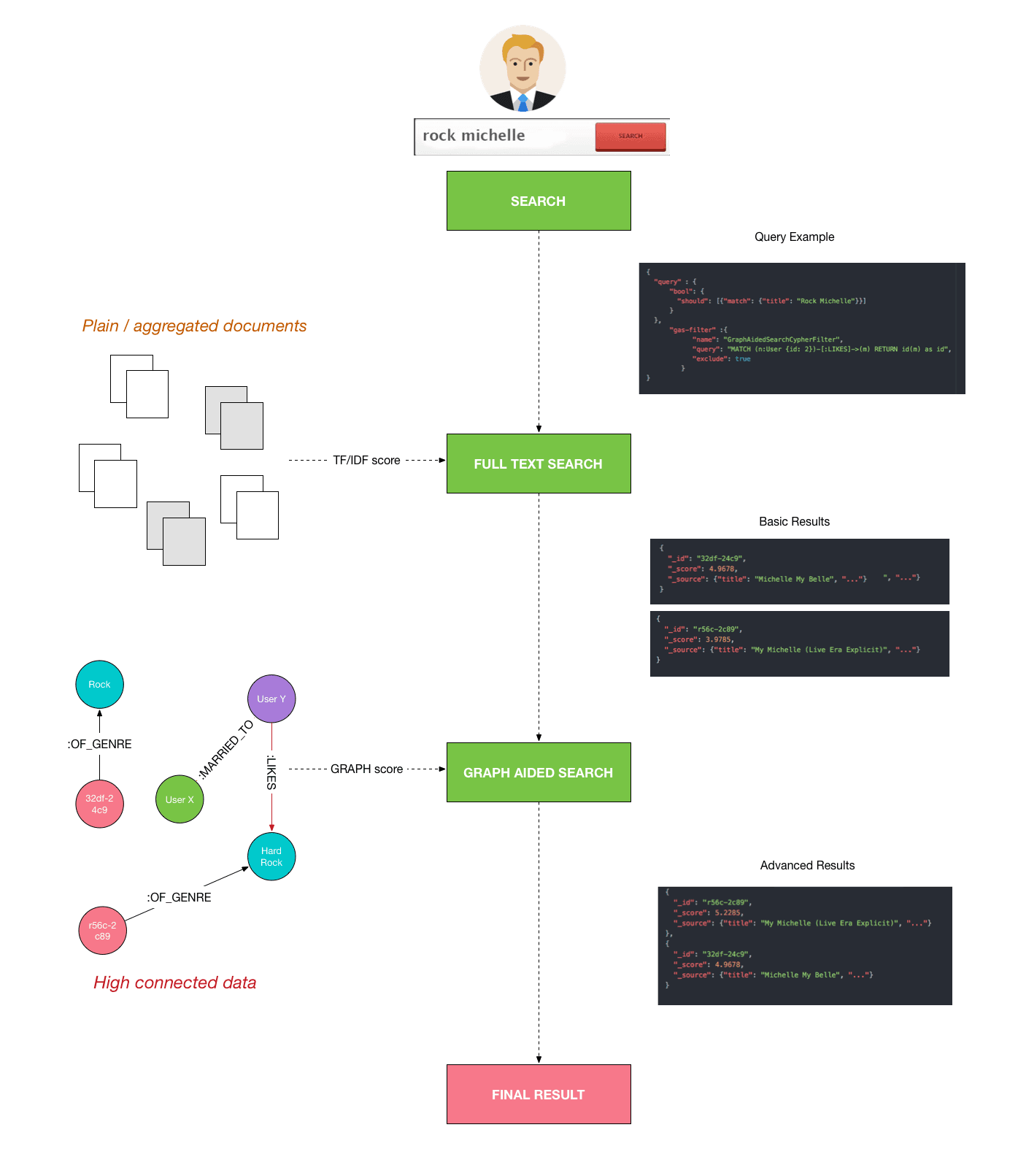

High Level Overview

The following image provides a 10,000 foot view of the entire workflow of the Graph-Aided Search process.

The plugin operates after the fulltext search stage, getting score/filtering information from graph data or some machine learning model built on top of it. Result scores are then composed, the resulting order is changed, and the final results are returned to the user.
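As a rough illustration of this post-processing stage, the sketch below re-ranks a list of hits with scores coming from an external source. The function name `compose_scores`, the choice of multiplication as the composition operator and the simplified hit dictionaries are all assumptions made for this example; the plugin's actual composition logic may differ.

```python
from typing import Dict, List


def compose_scores(hits: List[dict], graph_scores: Dict[str, float]) -> List[dict]:
    """Multiply each hit's text score by its graph score and re-sort.

    Hits without a graph score keep their original text score (factor 1.0).
    Multiplication is only one possible way to compose the two scores.
    """
    rescored = []
    for hit in hits:
        factor = graph_scores.get(hit["_id"], 1.0)
        rescored.append({**hit, "_score": hit["_score"] * factor})
    # Highest combined score first, as a search engine would return them.
    return sorted(rescored, key=lambda h: h["_score"], reverse=True)


# Simplified hits: only the fields needed for the illustration.
hits = [
    {"_id": "1297", "_score": 3.0},
    {"_id": "1446", "_score": 2.0},
]
reranked = compose_scores(hits, {"1446": 4.0})
print([h["_id"] for h in reranked])
```

The second hit overtakes the first one because its graph score lifts the combined value above the unboosted text score.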

Searching Documents

You will continue to search for documents in Elasticsearch with the Query DSL. The Graph-Aided Search plugin will intercept the query and the Search Hits returned by Elasticsearch.

Process the Elasticsearch Results with Neo4j

Before returning the results back to the user, you can define in the query the type of operation you want to execute with the partial results.

The types of operations currently supported are:

- Filtering: include or exclude a Search Hit

- Boosting: Search Hit score transformation

The operations are processed by application logic on the Neo4j side, for example, a recommendation engine endpoint built with neo4j-reco or reco4php, or simply by providing a Cypher query to be executed at the Neo4j Cypher transactional endpoint in the query DSL.

The Search Hit document IDs are passed along with the query as parameters, which is useful to do recommendations based on a constrained set of items.

The following listing is an example of a query matching movie documents in Elasticsearch and filtering items out if the user performing the search has already liked them.

{

"query" : {

"bool": {

"should": [{"match": {"title": "Toy Story"}}]

}

},

"gas-filter" : {

"name": "SearchResultCypherFilter",

"query": "MATCH (n:User {id: 2})-[:LIKES]->(m) RETURN m.objectId as id",

"exclude": true

}

}

It is important to have a correlation between the document IDs in Elasticsearch and the corresponding nodes in Neo4j. Since using Neo4j’s internal node IDs as keys in third-party systems is considered a bad practice, we recommend using neo4j-to-elasticsearch with node uuids. This uuid (or other key property) will be used to identify documents on the Elasticsearch platform.
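The effect of such a filter on a list of hits can be sketched in a few lines. The function below is a hypothetical illustration, not the plugin's code: it assumes that with exclude set to true the returned ids are removed, and that with exclude set to false only the returned ids are kept. It also shows why the ID correlation matters: Elasticsearch document IDs are strings, so the keys coming from the graph are normalised to strings before comparing.

```python
from typing import Iterable, List


def apply_filter(hits: List[dict], graph_ids: Iterable, exclude: bool) -> List[dict]:
    """Keep or drop hits depending on the ids returned by the graph query."""
    # Graph keys may be integers; Elasticsearch _id values are strings.
    ids = {str(i) for i in graph_ids}
    if exclude:
        return [h for h in hits if h["_id"] not in ids]
    return [h for h in hits if h["_id"] in ids]


hits = [{"_id": "1"}, {"_id": "2"}, {"_id": "3"}]
print(apply_filter(hits, [2], exclude=True))
print(apply_filter(hits, [2], exclude=False))
```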

Processing the Neo4j Response

Once the graph endpoint returns the result of the chosen operation, the plugin transforms the Search Hit scores or filters some results out.

Note that during the interception of the search query, the plugin will modify the default or user defined size of desired results in order to have a relevant set of documents to process.
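The reason for enlarging the result size is easier to see with a toy example: if the filter runs on exactly the number of hits the user asked for, fewer hits than requested may survive. The sketch below is an assumption-laden illustration; `run_filtered_search`, `overfetch_factor` and the fake index are invented here and do not describe how the plugin chooses its internal size.

```python
from typing import Callable, List


def run_filtered_search(
    search: Callable[[int], List[int]],
    is_allowed: Callable[[int], bool],
    requested_size: int,
    overfetch_factor: int = 10,
) -> List[int]:
    """Fetch more candidates than requested, filter, then trim to size."""
    candidates = search(requested_size * overfetch_factor)
    kept = [hit for hit in candidates if is_allowed(hit)]
    return kept[:requested_size]


# Hypothetical index: hits are plain integers, already sorted by relevance.
fake_index = list(range(100))


def fake_search(size: int) -> List[int]:
    return fake_index[:size]


def is_even(hit: int) -> bool:
    return hit % 2 == 0


# Without over-fetching only two of the three requested hits survive.
print(run_filtered_search(fake_search, is_even, 3, overfetch_factor=1))
# With over-fetching the page is complete.
print(run_filtered_search(fake_search, is_even, 3, overfetch_factor=2))
```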

Returning the Results to the User

The last step in the process is obviously returning the Search Hits back to the user with the corresponding modified scores.

Build Your First Graph-Aided Search Application

This section of the blog post is more technical and will guide you through the setup and configuration of the following architecture:

- an Elasticsearch cluster with the GraphAidedSearch plugin

- a running Neo4j database

- a Neo4j plugin for replicating Transaction Event data to Elasticsearch

- a Neo4j recommendation engine providing an endpoint for receiving the Elasticsearch result hits

- an example dataset for testing the search

This should take you up to 30 minutes. Note that both the Elasticsearch and the Neo4j plugins can run in standalone mode, but combined together, they offer a real framework for building powerful personalised search systems. For details about Elasticsearch plugin parameters, refer to the project README.

Install and Configure Neo4j

First of all, download Neo4j Community Edition from the Neo4j site.

$ wget -O neo4j-community-2.3.3-unix.tar.gz "http://neo4j.com/artifact.php?name=neo4j-community-2.3.3-unix.tar.gz"

Uncompress it wherever you prefer.

$ tar xvzf neo4j-community-2.3.3-unix.tar.gz

Install Neo4j Plugins

Move to the plugin directory:

$ cd neo4j-community-2.3.3/plugins

Download the GraphAware Framework and Elasticsearch Plugin in the plugins directory (verify updated urls at https://products.graphaware.com/):

$ wget https://products.graphaware.com/download/framework-server-community/graphaware-server-community-all-2.3.3.37.jar

$ wget https://products.graphaware.com/download/neo4j-to-elasticsearch/graphaware-neo4j-to-elasticsearch-2.3.3.37.1.jar

Edit the Neo4j configuration file:

$ cd ..

$ vi conf/neo4j-server.properties

And add the following lines:

#Enable Framework

org.neo4j.server.thirdparty_jaxrs_classes=com.graphaware.server=/graphaware

com.graphaware.runtime.enabled=true

#ES becomes the module ID:

com.graphaware.module.ES.1=com.graphaware.module.es.ElasticSearchModuleBootstrapper

#URI of Elasticsearch

com.graphaware.module.ES.uri=localhost

#Port of Elasticsearch

com.graphaware.module.ES.port=9200

#optional, Elasticsearch index name, default is neo4j-index

com.graphaware.module.ES.index=neo4j-index

#optional, node property key of a property that is used as unique identifier of the node. Must be the same as com.graphaware.module.UIDM.uuidProperty, defaults to uuid

com.graphaware.module.ES.keyProperty=objectId

#optional, size of the in-memory queue that queues up operations to be synchronised to Elasticsearch, defaults to 10000

com.graphaware.module.ES.queueSize=100

Now Neo4j is ready and can be started:

$ ./bin/neo4j start

Check the logs to see if everything is ok. The logs should contain lines such as the following:

$ tail -n1000 -f data/log/console.log

2016-04-05 23:48:08.306+0200 INFO Successfully started database

2016-04-05 23:48:08.446+0200 INFO Starting HTTP on port 7474 (4 threads available)

2016-04-05 23:48:09.239+0200 INFO Enabling HTTPS on port 7473

2016-04-05 23:48:09.357+0200 INFO Loaded server plugin "SpatialPlugin"

2016-04-05 23:48:09.367+0200 INFO GraphDatabaseService.addSimplePointLayer: add a new layer specialized at storing simple point location data

2016-04-05 23:48:09.367+0200 INFO GraphDatabaseService.addNodesToLayer: adds many geometry nodes (about 10k-50k) to a layer, as long as the nodes contain the geometry information appropriate to this layer.

2016-04-05 23:48:09.367+0200 INFO GraphDatabaseService.findClosestGeometries: search a layer for the closest geometries and return them.

2016-04-05 23:48:09.367+0200 INFO GraphDatabaseService.addGeometryWKTToLayer: add a geometry specified in WKT format to a layer, encoding in the specified layers encoding schemea.

2016-04-05 23:48:09.367+0200 INFO GraphDatabaseService.addEditableLayer: add a new layer specialized at storing generic geometry data in WKB

2016-04-05 23:48:09.367+0200 INFO GraphDatabaseService.findGeometriesWithinDistance: search a layer for geometries within a distance of a point. To achieve more complex CQL searches, pre-define the dynamic layer with addCQLDynamicLayer.

2016-04-05 23:48:09.368+0200 INFO GraphDatabaseService.addCQLDynamicLayer: add a new dynamic layer exposing a filtered view of an existing layer

2016-04-05 23:48:09.368+0200 INFO GraphDatabaseService.addNodeToLayer: add a geometry node to a layer, as long as the node contains the geometry information appropriate to this layer.

2016-04-05 23:48:09.368+0200 INFO GraphDatabaseService.getLayer: find an existing layer

2016-04-05 23:48:09.368+0200 INFO GraphDatabaseService.findGeometriesInBBox: search a layer for geometries in a bounding box. To achieve more complex CQL searches, pre-define the dynamic layer with addCQLDynamicLayer.

2016-04-05 23:48:09.368+0200 INFO GraphDatabaseService.updateGeometryFromWKT: update an existing geometry specified in WKT format. The layer must already contain the record.

2016-04-05 23:48:09.407+0200 INFO Mounted unmanaged extension [com.graphaware.server] at [/graphaware]

2016-04-05 23:48:09.644+0200 INFO Mounting static content at /webadmin

2016-04-05 23:48:09.798+0200 INFO Mounting static content at /browser

23:48:10.681 [main] INFO c.g.s.f.b.GraphAwareBootstrappingFilter - Mounting GraphAware Framework at /graphaware

23:48:10.785 [main] INFO c.g.s.f.c.GraphAwareWebContextCreator - Will try to scan the following packages: {com.**.graphaware.**,org.**.graphaware.**,net.**.graphaware.**}

23:48:14.055 [main] DEBUG c.g.s.t.LongRunningTransactionFilter - Initializing com.graphaware.server.tx.LongRunningTransaction

23:48:14.085 [main] DEBUG c.g.s.t.LongRunningTransactionFilter - Initializing com.graphaware.server.tx.LongRunningTransaction

23:48:14.112 [main] DEBUG c.g.s.t.LongRunningTransactionFilter - Initializing com.graphaware.server.tx.LongRunningTransaction

23:48:15.233 [main] DEBUG c.g.s.t.LongRunningTransactionFilter - Initializing com.graphaware.server.tx.LongRunningTransaction

23:48:15.405 [main] DEBUG c.g.s.t.LongRunningTransactionFilter - Initializing com.graphaware.server.tx.LongRunningTransaction

23:48:15.642 [main] DEBUG c.g.s.t.LongRunningTransactionFilter - Initializing com.graphaware.server.tx.LongRunningTransaction

23:48:15.642 [main] DEBUG c.g.s.t.LongRunningTransactionFilter - Initializing com.graphaware.server.tx.LongRunningTransaction

2016-04-05 23:48:16.041+0200 INFO Remote interface ready and available at http://localhost:7474/

Install and Configure Elasticsearch

Move to a different directory and download the Elasticsearch software from the Elastic site.

$ wget https://download.elasticsearch.org/elasticsearch/release/org/elasticsearch/distribution/tar/elasticsearch/2.2.2/elasticsearch-2.2.2.tar.gz

Uncompress it wherever you prefer.

$ tar xvzf elasticsearch-2.2.2.tar.gz

$ cd elasticsearch-2.2.2

Install the Graph-Aided Search plugin and start Elasticsearch in daemon mode. During installation you will be asked to grant the plugin additional security permissions; you need to accept for the plugin to work.

$ ./bin/plugin install com.graphaware.es/graph-aided-search/2.2.2.0

$ ./bin/elasticsearch -d

The logs should look like the following:

[2016-04-05 23:52:57,982][INFO ][node ] [Alex Power] version[2.2.1], pid[24807], build[d045fc2/2016-03-09T09:38:54Z]

[2016-04-05 23:52:57,983][INFO ][node ] [Alex Power] initializing ...

[2016-04-05 23:52:59,753][INFO ][plugins ] [Alex Power] modules [lang-expression, lang-groovy], plugins [graph-aided-search], sites []

[2016-04-05 23:52:59,866][INFO ][env ] [Alex Power] using [1] data paths, mounts [[/ (/dev/disk1)]], net usable_space [272.5gb], net total_space [464.7gb], spins? [unknown], types [hfs]

[2016-04-05 23:52:59,866][INFO ][env ] [Alex Power] heap size [990.7mb], compressed ordinary object pointers [true]

[2016-04-05 23:52:59,868][WARN ][env ] [Alex Power] max file descriptors [10240] for elasticsearch process likely too low, consider increasing to at least [65536]

[2016-04-05 23:53:06,365][INFO ][node ] [Alex Power] initialized

[2016-04-05 23:53:06,366][INFO ][node ] [Alex Power] starting ...

[2016-04-05 23:53:06,542][INFO ][transport ] [Alex Power] publish_address {127.0.0.1:9300}, bound_addresses {[fe80::1]:9300}, {[::1]:9300}, {127.0.0.1:9300}

[2016-04-05 23:53:06,556][INFO ][discovery ] [Alex Power] elasticsearch/T5RXoZ-LTg-k7bP2tFEAyA

[2016-04-05 23:53:09,603][INFO ][cluster.service ] [Alex Power] new_master {Alex Power}{T5RXoZ-LTg-k7bP2tFEAyA}{127.0.0.1}{127.0.0.1:9300}, reason: zen-disco-join(elected_as_master, [0] joins received)

[2016-04-05 23:53:09,619][INFO ][http ] [Alex Power] publish_address {127.0.0.1:9200}, bound_addresses {[fe80::1]:9200}, {[::1]:9200}, {127.0.0.1:9200}

[2016-04-05 23:53:09,620][INFO ][node ] [Alex Power] started

[2016-04-05 23:53:09,715][INFO ][gateway ] [Alex Power] recovered [1] indices into cluster_state

Note the third line, which lists the plugins loaded at startup.

Configure Graph-Aided Search by defining the URL of the Neo4j server instance and enabling it.

$ curl -XPUT "http://localhost:9200/neo4j-index/_settings?index.gas.neo4j.hostname=http://localhost:7474&index.gas.enable=true"

Download and Import Data

Now we will import some data about movies and user likes from the popular GroupLens dataset resources site.

From the Neo4j server home, move to the import directory and download the data source:

$ cd data/import

$ wget http://files.grouplens.org/datasets/movielens/ml-100k.zip

$ unzip ml-100k.zip

In neo4j.properties, uncomment the following line:

dbms.security.load_csv_file_url_root=data/import

Restart the Neo4j database:

$ ./bin/neo4j restart

Now the database is ready to start ingestion. Open the Neo4j shell.

$ ./bin/neo4j-shell

Start the import (some of the operations can take a while):

CREATE CONSTRAINT ON (n:Movie) ASSERT n.objectId IS UNIQUE;

CREATE CONSTRAINT ON (n:User) ASSERT n.objectId IS UNIQUE;

USING PERIODIC COMMIT 500

LOAD CSV FROM "file:///ml-100k/u.user" AS line FIELDTERMINATOR '|'

CREATE (:User {objectId: toInt(line[0]), age: toInt(line[1]), gender: line[2], occupation: line[3]});

USING PERIODIC COMMIT 500

LOAD CSV FROM "file:///ml-100k/u.item" AS line FIELDTERMINATOR '|'

CREATE (:Movie {objectId: toInt(line[0]), title: line[1], date: line[2], imdblink: line[4]});

Check the Elasticsearch indices status:

$ curl -XGET http://localhost:9200/_cat/indices

#yellow open neo4j-index 5 1 2625 0 475.3kb 475.3kb

$ curl -XGET http://localhost:9200/neo4j-index/User/_search

$ curl -XGET http://localhost:9200/neo4j-index/Movie/_search

Reopen the neo4j-shell and start importing the relationships (this operation is the longest):

# In the neo4j-shell

USING PERIODIC COMMIT 500

LOAD CSV FROM "file:///ml-100k/u.data" AS line FIELDTERMINATOR '\t'

MATCH (u:User {objectId: toInt(line[1])})

MATCH (p:Movie {objectId: toInt(line[0])})

CREATE UNIQUE (u)-[:LIKES {rate: ROUND(toFloat(line[2])), timestamp: line[3]}]->(p);

Start Playing with Graph-Aided Search

Now that everything is in place, we can try a simple query, searching for movies with the word “love” in the title:

curl -X POST http://localhost:9200/neo4j-index/Movie/_search -d '{

"query" : {

"bool": {

"should": [{"match": {"title": "love"}}]

}

}

}';

The results will resemble the following listing:

{

"_shards": {

"failed": 0,

"successful": 5,

"total": 5

},

"hits": {

"hits": [

{

"_id": "1297",

"_index": "neo4j-index",

"_score": 2.9627202,

"_source": {

"date": "01-Jan-1994",

"imdblink": "http://us.imdb.com/M/title-exact?Love%20Affair%20(1994)",

"objectId": "1297",

"title": "Love Affair (1994)"

},

"_type": "Movie"

},

{

"_id": "1446",

"_index": "neo4j-index",

"_score": 2.919132,

"_source": {

"date": "01-Jan-1995",

"imdblink": "http://us.imdb.com/M/title-exact?Bye%20Bye,%20Love%20(1995)",

"objectId": "1446",

"title": "Bye Bye, Love (1995)"

},

"_type": "Movie"

},

[...]

],

"max_score": 2.9627202,

"total": 29

},

"timed_out": false,

"took": 6

}

We get a lot of results. We can now use a filter to keep only the films that people rated positively (here, by excluding movies with an average rating below 3):

curl -X POST http://localhost:9200/neo4j-index/Movie/_search -d '{

"query" : {

"bool": {

"should": [{"match": {"title": "love"}}]

}

},

"gas-filter" :{

"name": "SearchResultCypherFilter",

"query": "MATCH (n:User)-[r:LIKES]->(m) WITH m, avg(r.rate) as avg_rate where avg_rate < 3 RETURN m.objectId as id",

"exclude": true,

"keyProperty": "objectId"

}

}'

Now the results are:

{

"_shards": {

"failed": 0,

"successful": 5,

"total": 5

},

"hits": {

"hits": [

{

"_id": "1160",

"_index": "neo4j-index",

"_score": 2.6663055,

"_source": {

"date": "16-May-1997",

"imdblink": "http://us.imdb.com/Title?Love%21+Valour%21+Compassion%21+(1997)",

"objectId": "1160",

"title": "Love! Valour! Compassion! (1997)"

},

"_type": "Movie"

},

{

"_id": "1180",

"_index": "neo4j-index",

"_score": 2.57552,

"_source": {

"date": "01-Jan-1994",

"imdblink": "http://us.imdb.com/M/title-exact?I%20Love%20Trouble%20(1994)",

"objectId": "1180",

"title": "I Love Trouble (1994)"

},

"_type": "Movie"

},

{

"_id": "474",

"_index": "neo4j-index",

"_score": 1.28776,

"_source": {

"date": "01-Jan-1963",

"imdblink": "http://us.imdb.com/M/title-exact?Dr.%20Strangelove%20or:%20How%20I%20Learned%20to%20Stop%20Worrying%20and%20Love%20the%20Bomb%20(1963)",

"objectId": "474",

"title": "Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb (1963)"

},

"_type": "Movie"

}

],

"max_score": 2.9627202,

"total": 29

},

"timed_out": false,

"took": 243

}

In this case no score is changed; only the results list is different, since some results are filtered out. These are interesting results, but they are still generic. We can make them more personalised using a query which excludes movies already liked by the user:

curl -X POST http://localhost:9200/neo4j-index/Movie/_search -d '{

"query" : {

"bool": {

"should": [{"match": {"title": "love"}}]

}

},

"gas-filter" :{

"name": "SearchResultCypherFilter",

"query": "MATCH (n:User {objectId: 12})-[r:LIKES]->(m) RETURN m.objectId as id",

"exclude": true,

"keyProperty": "objectId"

}

}'

The user identified in :User {objectId: 12} should be supplied at the application level; it represents the actual user performing the search.
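One way an application might inject the current user into the request is sketched below. The helper name `build_filter_request` is hypothetical, and the integer check is our own precaution: because the user ID ends up inside a Cypher string, anything other than a plain integer is rejected rather than concatenated into the query.

```python
import json


def build_filter_request(term: str, user_id: int) -> str:
    """Build the search body that hides movies already liked by user_id."""
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if not isinstance(user_id, int) or isinstance(user_id, bool):
        raise TypeError("user_id must be an integer")
    cypher = (
        "MATCH (n:User {objectId: %d})-[r:LIKES]->(m) "
        "RETURN m.objectId as id" % user_id
    )
    body = {
        "query": {"bool": {"should": [{"match": {"title": term}}]}},
        "gas-filter": {
            "name": "SearchResultCypherFilter",
            "query": cypher,
            "exclude": True,
            "keyProperty": "objectId",
        },
    }
    return json.dumps(body)


print(build_filter_request("love", 12))
```

Calling the helper with the term love and user 12 yields the same request body as the curl command above.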

In this case the results are:

{

"_shards": {

"failed": 0,

"successful": 5,

"total": 5

},

"hits": {

"hits": [

{

"_id": "1160",

"_index": "neo4j-index",

"_score": 2.6663055,

"_source": {

"date": "16-May-1997",

"imdblink": "http://us.imdb.com/Title?Love%21+Valour%21+Compassion%21+(1997)",

"objectId": "1160",

"title": "Love! Valour! Compassion! (1997)"

},

"_type": "Movie"

},

{

"_id": "1180",

"_index": "neo4j-index",

"_score": 2.57552,

"_source": {

"date": "01-Jan-1994",

"imdblink": "http://us.imdb.com/M/title-exact?I%20Love%20Trouble%20(1994)",

"objectId": "1180",

"title": "I Love Trouble (1994)"

},

"_type": "Movie"

},

{

"_id": "1457",

"_index": "neo4j-index",

"_score": 1.8267004,

"_source": {

"date": "11-Oct-1996",

"imdblink": "http://us.imdb.com/M/title-exact?Love%20Is%20All%20There%20Is%20(1996)",

"objectId": "1457",

"title": "Love Is All There Is (1996)"

},

"_type": "Movie"

},

[...]

],

"max_score": 2.9627202,

"total": 26

},

"timed_out": false,

"took": 129

}

The same Elasticsearch query produces different results thanks to our filters. This use case operates only by filtering results. Another interesting one is changing the score based on user interests or preferences. This can be done in two different ways: using a Cypher query or using a recommendation engine. The first is presented in this section.

We can build a real user-based collaborative filtering recommender using a Cypher query. For example, in the following query we compute the Tanimoto coefficient (the similarity measure from which the Tanimoto distance is derived) between the target user and the other users, then use this similarity to predict the user's interest in all the unseen movies. We then use this predicted score to boost the results for movies that contain “love” in the title.
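To make the arithmetic of this approach easier to follow, here is a small in-memory sketch of the same idea. It is not the Cypher query used in this post: the `ratings` data and the function names are invented for the illustration. The similarity between two users is the number of movies they have in common divided by the number of movies liked by either of them, and the predicted rating of an unseen movie is the similarity-weighted average of the ratings given by the other users.

```python
from typing import Dict

Ratings = Dict[str, Dict[str, float]]  # user -> {movie: rate}


def tanimoto(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    common = len(a & b)
    union = len(a) + len(b) - common
    return common / union if union else 0.0


def predict_ratings(ratings: Ratings, target: str) -> Dict[str, float]:
    """Predict ratings for the movies the target user has not rated yet."""
    seen = set(ratings[target])
    weighted_sum: Dict[str, float] = {}
    sim_sum: Dict[str, float] = {}
    for other, other_ratings in ratings.items():
        if other == target:
            continue
        sim = tanimoto(seen, set(other_ratings))
        if sim == 0:
            continue  # no movie in common: this user carries no weight
        for movie, rate in other_ratings.items():
            if movie in seen:
                continue
            weighted_sum[movie] = weighted_sum.get(movie, 0.0) + sim * rate
            sim_sum[movie] = sim_sum.get(movie, 0.0) + sim
    return {m: weighted_sum[m] / sim_sum[m] for m in weighted_sum}


# Hypothetical ratings used only for this illustration.
ratings: Ratings = {
    "u1": {"A": 5, "B": 3},
    "u2": {"A": 4, "B": 2, "C": 4},
    "u3": {"A": 5, "D": 2, "C": 2},
}
predicted = predict_ratings(ratings, "u1")
print(predicted)
```

Here u2 shares two of three movies with u1 (similarity 2/3) and u3 shares one of four (similarity 1/4), so the prediction for movie C leans towards the rating given by u2.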

curl -X POST http://localhost:9200/neo4j-index/Movie/_search -d '{

"size": 3,

"query" : {

"bool": {

"should": [{"match": {"title": "love"}}]

}

},

"gas-booster" :{

"name": "SearchResultCypherBooster",

"query": "MATCH (input:User) WHERE id(input) = 2

MATCH (input)-[r:LIKES]->(totalMovie)

WITH input, count(totalMovie) as totalUserMovieCount

MATCH (input)-[:LIKES]->(movie)<-[r2:LIKES]-(other)

WITH input, other, totalUserMovieCount, count(movie) as commonMovieCount

MATCH (other)-[:LIKES]->(otherMovie)

WITH input, other, totalUserMovieCount, commonMovieCount, count(otherMovie) as otherMovieCount

WITH input, other, commonMovieCount*1.0/(totalUserMovieCount + otherMovieCount - commonMovieCount) as tonimotoDinstance

MATCH (predictedMovie:Movie)

WHERE NOT (input)-[:LIKES]->(predictedMovie)

WITH other, tonimotoDinstance, predictedMovie

MATCH (other)-[or:LIKES]->(predictedMovie)

WITH other, predictedMovie, tonimotoDinstance*or.rate as weightedRatingSum, tonimotoDinstance as similarity

WITH predictedMovie, sum(similarity) as simSum, sum(weightedRatingSum) as wSum

RETURN predictedMovie.objectId as objectId, CASE simSum WHEN 0 then 0 else wSum/simSum END as predRating",

"identifier": "objectId",

"scoreName": "predRating"

}

}'

Here are the results:

{

"_shards": {

"failed": 0,

"successful": 5,

"total": 5

},

"hits": {

"hits": [

{

"_id": "535",

"_index": "neo4j-index",

"_score": 10.486974,

"_source": {

"date": "23-May-1997",

"imdblink": "http://us.imdb.com/M/title-exact?Addicted%20to%20Love%20%281997%29",

"objectId": "535",

"title": "Addicted to Love (1997)"

},

"_type": "Movie"

},

{

"_id": "139",

"_index": "neo4j-index",

"_score": 10.25477,

"_source": {

"date": "01-Jan-1969",

"imdblink": "http://us.imdb.com/M/title-exact?Love%20Bug,%20The%20(1969)",

"objectId": "139",

"title": "Love Bug, The (1969)"

},

"_type": "Movie"

},

{

"_id": "36",

"_index": "neo4j-index",

"_score": 8.61382,

"_source": {

"date": "01-Jan-1995",

"imdblink": "http://us.imdb.com/M/title-exact?Mad%20Love%20(1995)",

"objectId": "36",

"title": "Mad Love (1995)"

},

"_type": "Movie"

}

],

"max_score": 10.486974,

"total": 29

},

"timed_out": false,

"took": 2098

}

These results are really awesome, since we were able to create a Graph-Aided Search without writing a single line of code. This approach is a powerful tool during the testing and discovery phase, but it will not scale. As you can see from the JSON returned, the response time is quite high (took is 2098 ms here, against 6 ms for the plain text query shown earlier). In the next section, we present a different approach.

Moving Forward: Create a Recommendation Engine

A different and more scalable approach requires implementing a recommendation plugin for Neo4j that can be used for boosting results. This is a step-by-step guide for creating a plugin that uses the GraphAware Framework as the backend engine. The complete source code is available here.

Create a new Maven Project

Create a new Maven project and add the following artifacts to the dependency list in pom.xml.

<dependency>

<groupId>org.neo4j</groupId>

<artifactId>neo4j</artifactId>

<version>${neo4j.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>com.graphaware.neo4j</groupId>

<artifactId>common</artifactId>

<version>${graphaware.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>com.graphaware.neo4j</groupId>

<artifactId>api</artifactId>

<version>${graphaware.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>com.graphaware.neo4j</groupId>

<artifactId>recommendation-engine</artifactId>

<version>${graphaware.reco.version}</version>

</dependency>

Recommendation Engine

First of all we need a recommendation engine, so we create a new subclass of Neo4jTopLevelDelegatingRecommendationEngine, overriding name and engines. The latter is shown here:

@Override

protected List<RecommendationEngine<Node, Node>> engines() {

final CypherEngine cypherEngine = new CypherEngine("reco",

"MATCH (input:User) WHERE id(input) = {id}\n"

+ "MATCH p=(input)-[r:LIKES]->(movie)<-[r2:LIKES]-(other)\n"

+ "WITH other, collect(p) as paths\n"

+ "WITH other, reduce(x=0, p in paths | x + reduce(i=0, r in rels(p) | i+r.rate)) as score\n"

+ "WITH other, score\n"

+ "ORDER BY score DESC\n"

+ "MATCH (other)-[:LIKES]->(reco)\n"

+ "RETURN reco\n"

+ "LIMIT 500");

return Arrays.<RecommendationEngine<Node, Node>>asList(cypherEngine);

}

Recommendation Controller

At this point we need a REST endpoint to be contacted by the Elasticsearch plugin. So we create a new controller RecommendationController that exposes this endpoint.

@RequestMapping(value = "/recommendation/movie/filter/{userId}", method = RequestMethod.POST)

@ResponseBody

public List<RecommendationReduced> filter(@PathVariable long userId, @RequestParam("ids") String[] ids, @RequestParam(defaultValue = "10") int limit, @RequestParam("keyProperty") String keyProperty, @RequestParam(defaultValue = "") String config) {

try (Transaction tx = database.beginTx()) {

final List<Recommendation<Node>> recommendations = recoEngine.recommend(findUserById(userId), parser.produceConfig(limit, config));

return convert(recommendations, ids, keyProperty);

}

}

RecommendationReduced describes the results returned to the requester. It must produce the following structure in JSON:

[

{

"nodeId": 1212,

"objectId": "270",

"score": 3

},

{

"nodeId": 1041,

"objectId": "99",

"score": 1

},

{

"nodeId": 1420,

"objectId": "478",

"score": 1

},

{

"nodeId": 1428,

"objectId": "486",

"score": 1

}

]

So you need to create the following class:

public class RecommendationReduced {

private long nodeId;

private String objectId;

private float score;

public RecommendationReduced() {

}

public RecommendationReduced(long nodeId, long objectId, float score) {

this.objectId = String.valueOf(objectId);

this.score = score;

this.nodeId = nodeId;

}

public String getObjectId() {

return objectId;

}

public void setObjectId(String objectId) {

this.objectId = objectId;

}

public long getNodeId() {

return nodeId;

}

public float getScore() {

return score;

}

public void setScore(float score) {

this.score = score;

}

}

At this point we can compile and copy the target jar (named recommender-0.0.1-SNAPSHOT-jar-with-dependencies.jar) into the plugins directory of Neo4j and restart it.

$ cp target/... $NEO4J_HOME/plugins

$ cd $NEO4J_HOME

$ ./bin/neo4j restart

Finally, we can use it in the Elasticsearch plugin. Here is an example of a search query that uses the SearchResultNeo4jBooster:

curl -X POST http://localhost:9200/neo4j-index/Movie/_search -d '{

"query" : {

"match_all" : {}

},

"gas-booster" :{

"name": "SearchResultNeo4jBooster",

"target": "2",

"maxResultSize": 10,

"keyProperty": "objectId",

"neo4j.endpoint": "/graphaware/recommendation/movie/filter/"

}

}';

The endpoint matches the definition of the REST endpoint in our controller implementation.
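To check the endpoint by hand before wiring it into Elasticsearch, one could assemble a request URL from the controller's signature. The sketch below is an assumption on our part: the helper `recommendation_url` is hypothetical, and it simply appends the target user to the endpoint path and passes ids, limit and keyProperty as request parameters, which is how the controller shown earlier reads them. It does not claim to reproduce the exact request the Elasticsearch plugin sends.

```python
from typing import List
from urllib.parse import urlencode


def recommendation_url(
    host: str,
    endpoint: str,
    target: str,
    ids: List[str],
    key_property: str,
    limit: int = 10,
) -> str:
    """Build a URL matching the controller's path variable and parameters."""
    # doseq=True repeats the ids parameter once per value (ids=1&ids=2).
    query = urlencode(
        {"ids": ids, "limit": limit, "keyProperty": key_property},
        doseq=True,
    )
    return f"{host}{endpoint}{target}?{query}"


url = recommendation_url(
    "http://localhost:7474",
    "/graphaware/recommendation/movie/filter/",
    "2",
    ["1297", "1446"],
    "objectId",
)
print(url)
```

The resulting URL can then be sent as a POST request, since the controller mapping only accepts that method.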

Conclusion

The combination of these three amazing technologies – Neo4j, Elasticsearch, and GraphAware – offers you the ability to create competitive search engines for your applications. The only limitation is your imagination.