The AI assistant Maestro enables intelligence analysts to leverage the power of large language models (LLMs) without compromising data security.

GraphAware Hume has introduced a significant new feature in its 2.26 release called Maestro, which lays the groundwork for empowering intelligence analysts through the use of large language models (LLMs).

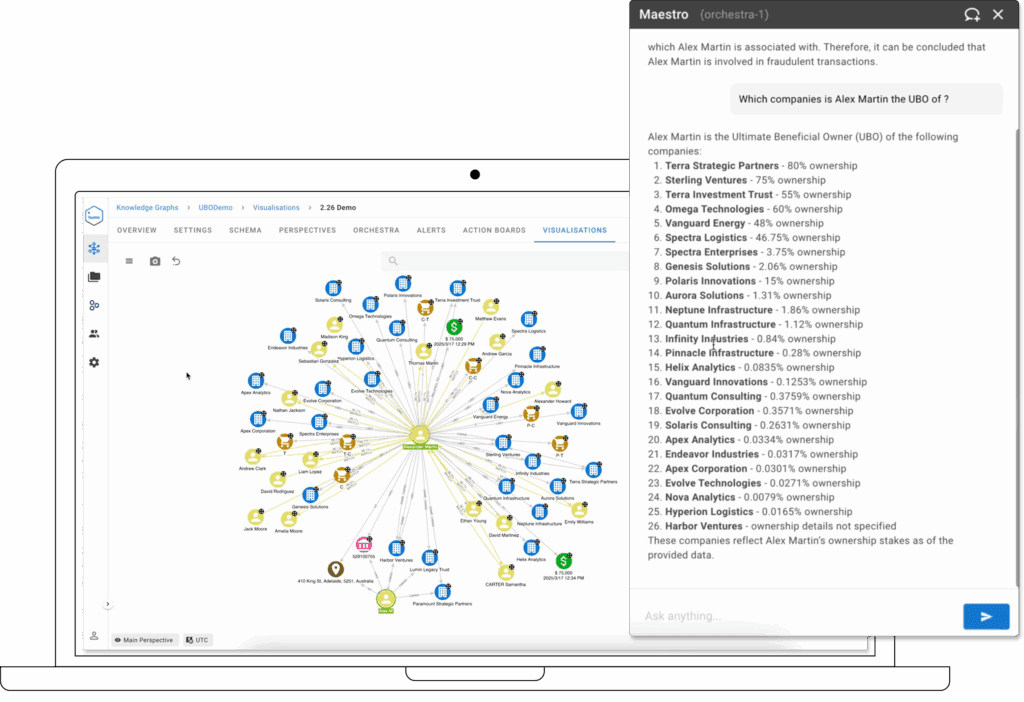

The concept of Maestro includes an interface that will allow analysts to explore knowledge graphs through natural language questions instead of queries, to uncover new insights, generate code snippets, summarise important facts or get in-depth contextual help while working with GraphAware Hume. In the recently introduced Beta version of Maestro, analysts can already access some of these capabilities while others will follow with the upcoming release of GraphAware Hume.

“Our vision is really to empower analysts to efficiently do the work they excel at. With Maestro, they don’t need to spend time on routine tasks that the machine can do for them,” explains Christophe Willemsen, Chief Technology Officer at GraphAware. He adds that LLM-based systems introduce new security challenges, and the team takes every precaution to protect client data and mitigate hallucinations. Graphs and LLMs are a natural combination for retrieving accurate answers: the structured information in the graph provides a framework that keeps the LLM’s answers in line with the data.

Security is a priority

Due to the sensitive nature of the work of law enforcement and intelligence agencies, security has been the primary focus when designing Hume Maestro. “GraphAware Hume is designed with a tight focus on access control and security in mind and Maestro conforms to that vision,” adds C. Willemsen. Even when users query the knowledge graph through an LLM, they cannot reach data they are not permitted to see.

At the same time, confidential data from the organisation will not be leaked through prompts to the online LLMs – a significant worry for agencies working with sensitive information. “We will be soon employing PII identification and anonymisation techniques to comply with security requirements of our clients,” C. Willemsen explains.
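
To make the idea of anonymising a prompt before it leaves the organisation more concrete, here is a minimal Python sketch. It is not GraphAware's implementation, which the article does not describe. The patterns, the placeholder format and the function names `anonymise` and `restore` are all assumptions chosen for illustration, and real PII detection would need far broader coverage than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only; not an exhaustive PII detector.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def anonymise(text):
    """Replace detected PII with numbered placeholders.

    Returns the redacted text and a placeholder -> original mapping that
    stays inside the organisation.
    """
    mapping = {}  # placeholder -> original value
    seen = {}     # original value -> placeholder, so repeats share one token

    for label, pattern in PII_PATTERNS.items():
        def substitute(match, label=label):
            value = match.group(0)
            if value not in seen:
                index = sum(1 for p in mapping if p.startswith(f"<{label}_")) + 1
                seen[value] = f"<{label}_{index}>"
                mapping[seen[value]] = value
            return seen[value]

        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text, mapping):
    """Put the original values back into a response that uses the placeholders."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


prompt = "Contact john.doe@example.com or +44 20 7946 0958 about the case."
safe_prompt, pii_map = anonymise(prompt)
print(safe_prompt)
```

Only `safe_prompt` would be sent to an online model. The mapping never leaves the local system and can be used to restore the original values in the model's reply.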

Confidence in the answers from an LLM is crucial, so a lot of care has been taken to counter the tendency of LLMs to hallucinate results. LLMs are known to favour giving a positive answer even when that means making one up. This tendency can be addressed at the prompt engineering stage, so that Maestro does not provide made-up answers.
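
One common prompt engineering pattern for this is to restrict the model to facts retrieved from the graph and give it an explicit way to decline. The Python sketch below illustrates that pattern only. The wording of the prompt and the function name `build_grounded_prompt` are assumptions, not the prompts Maestro actually uses.

```python
def build_grounded_prompt(question, facts):
    """Assemble a prompt that limits the model to the supplied facts.

    `facts` is a list of plain-text statements, assumed to have been
    retrieved from the knowledge graph beforehand.
    """
    context = "\n".join(f"- {fact}" for fact in facts) or "- (no facts retrieved)"
    return (
        "Answer the question using only the facts listed below.\n"
        "If the facts do not contain the answer, reply exactly: I don't know.\n\n"
        f"Facts:\n{context}\n\n"
        f"Question: {question}\n"
    )


example = build_grounded_prompt(
    "Who is vehicle AB123 registered to?",
    ["Vehicle AB123 is registered to J. Smith"],
)
print(example)
```

Giving the model a permitted "I don't know" answer reduces the pressure to invent one, although it does not by itself guarantee a correct response.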

AI Assistant for Hume

Maestro adds the ability to interact directly with the LLMs of the client’s choice. Both online services (OpenAI and Azure) and on-premises models (via Ollama) are already supported. Other LLMs are easy to add, including internal models trained and hosted by the client.

In its current version, Hume Maestro already contains the documentation of GraphAware Hume, so analysts can quickly check the specifics of the function or feature they want to use. It also offers concrete code suggestions for designing the layout of Action Boards and for writing Python scripts and Cypher queries. All the usual LLM features, such as summarisation and translation of content, are of course available as well.

Clients will also be able to add their own analytical and other documentation, so that all the necessary organisational reference material is at hand at all times. Maestro will help produce a high-level report on the current state of an ongoing analysis. Producing reports is a time-consuming task for analysts, and LLMs are capable of doing most of the heavy lifting.

Talk to your data

AI hallucinations would do the most damage when an analyst is interacting with a knowledge graph. Maestro now provides a chat interface that allows users to interact with their data using natural language, without needing to write queries. This is made possible by integrating Hume Maestro with a custom Hume Orchestra workflow that connects to external systems, such as large language models (LLMs), to interpret and respond to user questions.

It is important to note that this capability is not available out of the box. Users can configure it, typically using a GraphRAG approach, but doing so requires building custom workflows in Hume Orchestra. This is not yet a native product feature.
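
As a rough illustration of what a GraphRAG-style flow involves, the Python sketch below retrieves facts relevant to a question, filters them by the user's permissions, and passes only those facts to a model. It does not use the Hume Orchestra API, and everything in it is hypothetical: the in-memory `GRAPH`, the `required_role` field, the keyword matching and the stand-in `echo_llm` function are placeholders for a real graph query, access-control layer and model call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    obj: str
    required_role: str  # hypothetical access label attached to each fact


# Tiny in-memory stand-in for a knowledge graph.
GRAPH = [
    Fact("J. Smith", "OWNS", "Vehicle AB123", "officer"),
    Fact("J. Smith", "LIVES_AT", "12 High Street", "officer"),
    Fact("J. Smith", "INFORMANT_FOR", "Operation X", "supervisor"),
]


def retrieve(question, user_roles):
    """Return facts mentioned in the question that the user is allowed to see."""
    q = question.lower()
    return [
        f for f in GRAPH
        if f.required_role in user_roles
        and (f.subject.lower() in q or f.obj.lower() in q)
    ]


def answer(question, user_roles, llm):
    """Retrieve permitted facts, build a grounded prompt, and call the model."""
    facts = retrieve(question, user_roles)
    context = "\n".join(f"{f.subject} {f.relation} {f.obj}" for f in facts)
    prompt = f"Use only these facts:\n{context}\n\nQuestion: {question}"
    return llm(prompt)


def echo_llm(prompt):
    # Stand-in for a real model call; it simply returns the prompt it received.
    return prompt


print(answer("What do we know about J. Smith?", {"officer"}, echo_llm))
```

Because the permission filter runs before the prompt is built, facts the user is not cleared for never reach the model, which matches the access-control principle described earlier in the article.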

That said, even in its current Beta stage, Maestro enables non-technical users, such as police officers or frontline investigators, to access intelligence by asking questions in plain language. With the right setup, they can retrieve information about suspects, vehicles, or addresses within seconds, surfacing key relationships and patterns from large knowledge graphs without needing to know a query language.