In today’s data-driven world, organizations are drowning in information yet starving for insights. The problem isn’t a lack of data; it’s the inability to connect, integrate, and make sense of disparate data sources scattered across systems, departments, and organizations. Linked data addresses this by turning isolated data silos into interconnected knowledge networks, which makes data easier to discover, integrate, and analyze.

What is Linked Data?

Before diving into the intricacies of linked data, it’s essential to establish a solid foundation by understanding what data means in our modern context and how linked data revolutionizes traditional data management approaches.

What is Data?

Definition of Data:

Data represents facts, observations, measurements, or descriptions that can be processed, analyzed, and interpreted to generate meaningful information. In its raw form, data consists of individual elements such as numbers, text, images, or multimedia content that, when properly structured and contextualized, become valuable business assets.

Types of Data:

Modern organizations work with various data types, including structured data (organized in databases with defined schemas), semi-structured data (XML, JSON files with some organizational properties), and unstructured data (emails, documents, social media posts). Each type presents unique challenges for integration, analysis, and utilization across different systems and applications.

The Importance of Data Management:

Effective data management ensures data quality, accessibility, security, and compliance while enabling organizations to extract maximum value from their information assets. Poor data management leads to inconsistencies, duplication, security vulnerabilities, and missed opportunities for innovation and competitive advantage.

Defining Linked Data: Connecting the Dots

The core concept:

Linked data represents a paradigm shift from traditional data storage and management approaches by creating explicit connections between related data elements across different sources, systems, and organizations. Unlike conventional databases where relationships are implicit or limited to specific applications, linked data makes connections explicit, machine-readable, and globally accessible through web standards.

This approach transforms isolated data islands into interconnected knowledge networks where information flows seamlessly between related concepts, entities, and domains. For example, a customer record in a CRM system can directly link to product information, purchase history, support tickets, and even external data sources like social media profiles or industry databases.

The Semantic Web vision:

Linked data serves as the foundation for the Semantic Web, Tim Berners-Lee’s vision of a web where machines can understand and process information as effectively as humans. This vision extends beyond simple data storage to create a global knowledge graph where computers can reason about relationships, infer new knowledge, and provide intelligent responses to complex queries.

The Semantic Web enables applications to automatically discover relevant information, understand context and meaning, and make intelligent decisions based on interconnected data relationships. This capability transforms how we interact with information, moving from keyword-based searches to context-aware, intelligent information discovery and processing.

Why Linked Data matters:

Linked data addresses critical challenges in modern data management: it improves data integration across heterogeneous systems, makes data easier to discover through semantic relationships, and improves interoperability between different platforms, applications, and organizations.

Organizations implementing linked data strategies commonly cite improvements in data quality, reduced integration costs, faster time-to-insight, and a better ability to discover hidden patterns and relationships within their data assets. Where they materialize, these benefits can translate into competitive advantages, improved customer experiences, and new revenue opportunities.

Linked Data vs. Traditional Data: Key Differences

Structured vs. Unstructured Data:

Traditional data management systems excel at handling structured data with predefined schemas but struggle with unstructured or semi-structured information. Linked data approaches treat all information as interconnected resources, regardless of their original format or structure, enabling unified access and analysis across diverse data types.

Relational Databases vs. Graph Databases:

While relational databases organize information in tables connected by foreign keys, linked data uses graph-based models where entities and relationships are first-class citizens. This difference allows a more natural representation of complex, interconnected information and supports flexible query patterns, such as variable-length path traversals, that are awkward to express in traditional SQL.

Graph-based approaches also ease several pain points of relational models: multi-hop relationships don’t have to be spelled out as chains of joins, schemas can evolve incrementally, and many-to-many relationships don’t require separate junction tables. This flexibility lets organizations adapt their data models as business requirements evolve, often without costly database restructuring projects.

The power of relationships:

Traditional databases treat relationships as secondary concerns, implemented through foreign keys and join operations. Linked data elevates relationships to equal status with entities, enabling rich, semantically meaningful connections that can be traversed, queried, and analyzed as easily as the data they connect.

Actionable Insight:

Understanding the fundamental differences between linked data and traditional data management approaches is crucial for organizations considering digital transformation initiatives. Linked data offers superior flexibility, integration capabilities, and semantic richness, making it particularly valuable for complex, interconnected business scenarios where traditional approaches fall short.

Key Components of Linked Data: Building Blocks

Understanding the technical components that enable linked data is essential for anyone looking to implement or work with linked data systems. These components work together to create the infrastructure necessary for publishing, discovering, and utilizing interconnected data resources.

URIs (Uniform Resource Identifiers): The Foundation of Identity

What are URIs and why are they important?

URIs serve as the fundamental addressing mechanism for linked data, providing globally unique identifiers for every entity, concept, or resource. Unlike traditional database keys that are only meaningful within specific systems, URIs enable global identification and reference, making it possible to unambiguously refer to resources across different applications, organizations, and domains.

The importance of URIs extends beyond simple identification. They enable the creation of distributed data networks where resources can be referenced and linked regardless of their physical location or the systems that manage them. This capability is fundamental to the web-like nature of linked data and enables the creation of knowledge networks that span organizational and technical boundaries.

Best practices for creating and managing URIs:

Effective URI design requires careful consideration of naming conventions, persistence, and governance. URIs should be human-readable when possible, follow consistent naming patterns, and remain stable over time. Organizations should establish URI governance policies that address ownership, maintenance, and evolution of URI namespaces.

Common URI patterns include using domain names to establish ownership and authority, incorporating meaningful path structures that reflect organizational hierarchies or data classifications, and avoiding implementation-specific details that might change over time. For example, “http://company.com/products/widget-123” is preferable to “http://company.com/database/table/products/id=123” because it’s more stable and meaningful.
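As a minimal sketch of such a naming pattern, the helper below builds URIs of the form shown in the example above. The function names `slugify` and `mint_uri` are hypothetical, and the slug rules (lowercase, hyphens for anything that is not a letter or digit) are an assumption for illustration, not a standard.

```python
import re

BASE = "http://company.com"  # example domain taken from the text above


def slugify(label: str) -> str:
    """Lowercase a label and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", label.lower()).strip("-")


def mint_uri(collection: str, label: str, base: str = BASE) -> str:
    """Build a readable URI from a collection name and a label.

    Nothing about the storage layer (tables, row ids) leaks into the URI,
    so it can stay stable if the backing system changes.
    """
    return f"{base}/{slugify(collection)}/{slugify(label)}"


print(mint_uri("products", "Widget 123"))
# -> http://company.com/products/widget-123
```

In practice the label should come from an identifier that never changes, since a URI derived from a display name would change whenever the product is renamed.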

RDF (Resource Description Framework): Describing Relationships

Introduction to RDF triples:

RDF provides the fundamental data model for linked data through the concept of triples—statements consisting of subject, predicate, and object components. This simple yet powerful model enables the representation of any factual statement in a machine-readable format that can be processed, queried, and reasoned about by computer systems.
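To make the triple model concrete, here is a small sketch that represents statements as plain Python tuples. The `http://company.com/` namespace and the property names `name` and `madeBy` are made up for illustration; a real dataset would reuse an established vocabulary and an RDF library rather than bare tuples.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str    # the resource being described (a URI)
    predicate: str  # the property or relationship (a URI)
    obj: str        # another URI, or a literal value


EX = "http://company.com/"  # hypothetical namespace

graph = {
    Triple(EX + "products/widget-123", EX + "vocab/name", "Widget 123"),
    Triple(EX + "products/widget-123", EX + "vocab/madeBy", EX + "org/acme"),
    Triple(EX + "org/acme", EX + "vocab/name", "Acme Corporation"),
}


def describe(resource: str) -> list[Triple]:
    """Return every statement whose subject is the given resource."""
    return sorted(t for t in graph if t.subject == resource)


for triple in describe(EX + "products/widget-123"):
    print(triple.predicate, "->", triple.obj)
```

Note that the object of the `madeBy` statement is itself the subject of another statement. That shared URI is what turns a set of separate facts into a graph.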

RDF serialization formats:

RDF data can be serialized in various formats to meet different technical requirements and use cases. Turtle (Terse RDF Triple Language) provides a human-readable format that’s ideal for development and debugging. JSON-LD (JavaScript Object Notation for Linked Data) enables easy integration with web applications and APIs. RDF/XML offers compatibility with XML-based systems and tools.

Each serialization format has specific advantages and use cases. JSON-LD is particularly popular for web applications because it integrates seamlessly with JavaScript and modern web development frameworks. Turtle is preferred for data modeling and ontology development due to its readability and compact syntax. Organizations often support multiple formats to accommodate different technical requirements and integration scenarios.
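The sketch below writes the same two statements about one product in Turtle and in JSON-LD, so the formats can be compared side by side. It builds the text by hand with the standard library only; the product URI is the example used earlier, and typing it as a Schema.org `Product` is an assumption for illustration.

```python
import json

SUBJECT = "http://company.com/products/widget-123"
SCHEMA = "https://schema.org/"

# Turtle: a prefix declaration, then the subject followed by
# predicate/object pairs separated by ';' and terminated by '.'.
turtle = (
    f"@prefix schema: <{SCHEMA}> .\n"
    f"<{SUBJECT}> a schema:Product ;\n"
    f'    schema:name "Widget 123" .\n'
)

# JSON-LD: the same two statements as an ordinary JSON object.
# "@context" maps the "schema" prefix to the vocabulary namespace.
json_ld = json.dumps(
    {
        "@context": {"schema": SCHEMA},
        "@id": SUBJECT,
        "@type": "schema:Product",
        "schema:name": "Widget 123",
    },
    indent=2,
)

print(turtle)
print(json_ld)
```

Both outputs describe the same graph; only the surface syntax differs, which is why a publisher can offer several formats from one underlying dataset.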

How RDF enables semantic queries and reasoning:

The structured nature of RDF triples enables sophisticated querying capabilities that go beyond simple data retrieval. Applications can perform semantic queries that discover implicit relationships, infer new knowledge from existing data, and provide intelligent responses to complex questions that span multiple data sources and domains.
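As one illustration of inferring new knowledge, the sketch below applies a single RDFS-style rule: if a resource has type C and C is a subclass of D, then the resource also has type D. The class names (`ex:Manager`, `ex:Employee`, `ex:Person`) are hypothetical, and the naive loop is meant to show the idea rather than how a production reasoner is implemented.

```python
RDF_TYPE = "rdf:type"
SUBCLASS_OF = "rdfs:subClassOf"


def infer_types(triples):
    """Return the type statements implied by the subclass rule but not asserted."""
    known = set(triples)
    changed = True
    while changed:  # repeat until a full pass adds nothing new
        changed = False
        for s, p, o in list(known):
            if p != RDF_TYPE:
                continue
            for c, p2, d in list(known):
                implied = (s, RDF_TYPE, d)
                if p2 == SUBCLASS_OF and c == o and implied not in known:
                    known.add(implied)
                    changed = True
    return known - set(triples)


asserted = [
    ("ex:alice", RDF_TYPE, "ex:Manager"),
    ("ex:Manager", SUBCLASS_OF, "ex:Employee"),
    ("ex:Employee", SUBCLASS_OF, "ex:Person"),
]

for triple in sorted(infer_types(asserted)):
    print(triple)
```

A query for all `ex:Person` resources would now find `ex:alice`, even though no source ever stated that fact directly.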

SPARQL: Querying Linked Data

Introduction to the SPARQL query language:

SPARQL (SPARQL Protocol and RDF Query Language) serves as the standard query language for linked data, providing capabilities similar to SQL for relational databases but designed specifically for graph-based data models. SPARQL enables users to query across distributed linked data sources, discover relationships, and extract insights from interconnected information networks.

Basic SPARQL queries:

SPARQL queries use pattern matching to identify relevant triples in linked data sources. The SELECT clause names the variables to return, the WHERE clause specifies the triple patterns to match, and FILTER expressions restrict the matches to those that satisfy a condition. For example, a query to find all employees of Acme Corporation might use a pattern like “?person worksFor AcmeCorp”, where ?person is a variable and, in a real query, worksFor and AcmeCorp would be written as URIs or prefixed names.
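The following sketch shows what matching a single triple pattern means. It is a toy matcher over an in-memory list, not a SPARQL engine, and the data and the `ex:` prefix in the query string are hypothetical. The `QUERY` string shows how the same pattern might look in SPARQL; it is included for comparison and is not executed.

```python
# How the pattern could be written in SPARQL (shown for reference only).
QUERY = """
PREFIX ex: <http://company.com/vocab/>
SELECT ?person WHERE { ?person ex:worksFor ex:AcmeCorp . }
"""

TRIPLES = [
    ("alice", "worksFor", "AcmeCorp"),
    ("bob", "worksFor", "AcmeCorp"),
    ("carol", "worksFor", "Globex"),
]


def match(pattern, triples):
    """Yield variable bindings for one triple pattern.

    Terms starting with '?' are variables; every other term must be equal
    to the value in the same position of the triple.
    """
    for triple in triples:
        bindings = {}
        for term, value in zip(pattern, triple):
            if term.startswith("?"):
                # A variable used twice must bind to the same value both times.
                if bindings.setdefault(term, value) != value:
                    break
            elif term != value:
                break
        else:
            yield bindings


for row in match(("?person", "worksFor", "AcmeCorp"), TRIPLES):
    print(row["?person"])
```

A full query combines several such patterns and joins their bindings on shared variables, which is what lets one query follow links across resources.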

Advanced SPARQL queries:

Advanced SPARQL capabilities include CONSTRUCT queries that create new RDF graphs from existing data, DESCRIBE queries that retrieve comprehensive information about specific resources, and federated queries that span multiple linked data sources. These capabilities enable sophisticated data integration and analysis scenarios that would be difficult or impossible with traditional query languages.

Linked Data vs. Linked Open Data (LOD): Understanding the Difference

While linked data and Linked Open Data (LOD) are closely related concepts, understanding their differences is crucial for organizations developing data sharing and publication strategies. This distinction impacts licensing, accessibility, and the potential for creating network effects through data interconnection.

Defining Linked Open Data (LOD): Openness and Accessibility

The importance of open licenses: Linked Open Data extends the principles of linked data by adding requirements for open licensing that enables free access, use, and redistribution of data resources. Common open licenses include CC0 (Creative Commons Zero) which places data in the public domain, and CC-BY (Creative Commons Attribution) which requires attribution but otherwise allows unrestricted use.

Open licensing is fundamental to creating network effects where the value of linked data increases as more organizations contribute to shared knowledge networks. Without open licenses, legal restrictions limit the ability to combine, analyze, and build upon linked data resources, reducing their potential impact and utility.

The 5-Star LOD scheme: Tim Berners-Lee’s 5-Star deployment scheme provides a framework for evaluating the quality and openness of linked data publications. One star indicates data available on the web under an open license, in any format. Two stars require machine-readable structured data. Three stars mandate a non-proprietary format. Four stars require open W3C standards (URIs to identify things, RDF to describe them). Five stars demand linking to other data sources to provide context and enable discovery.

This rating system helps organizations assess the quality and utility of linked data sources while providing a roadmap for improving their own data publications. Higher-rated data sources typically provide greater value and utility for consumers while contributing more effectively to global knowledge networks.
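Because each star builds on the ones before it, the scheme can be expressed as a simple cumulative check. The sketch below is one way to encode it; the `Dataset` fields are hypothetical yes/no self-assessments, not part of any official tool.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    open_license: bool         # on the web under an open license
    machine_readable: bool     # structured data, not e.g. a scanned image
    non_proprietary: bool      # open format rather than a vendor-specific one
    uses_uris_and_rdf: bool    # things identified by URIs, described in RDF
    links_to_other_data: bool  # links out to other datasets


def star_rating(ds: Dataset) -> int:
    """Count stars cumulatively: a level only counts if all earlier ones are met."""
    levels = [
        ds.open_license,
        ds.machine_readable,
        ds.non_proprietary,
        ds.uses_uris_and_rdf,
        ds.links_to_other_data,
    ]
    stars = 0
    for met in levels:
        if not met:
            break
        stars += 1
    return stars


csv_export = Dataset(True, True, True, False, False)
print(star_rating(csv_export))  # -> 3
```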

The Relationship Between Linked Data and LOD: A Subset

All LOD is Linked Data, but not all Linked Data is LOD: This relationship reflects the fact that linked data principles focus on technical implementation and interconnection, while Linked Open Data adds requirements for open licensing and accessibility. Organizations can implement linked data approaches for internal use or controlled sharing without meeting the openness requirements of LOD.

The choice between linked data and Linked Open Data depends on organizational goals, competitive considerations, and regulatory requirements. Many organizations start with internal linked data implementations to gain experience and demonstrate value before considering broader LOD publication strategies.

The benefits of publishing data as LOD: Organizations that publish Linked Open Data often discover unexpected benefits including increased visibility and recognition, opportunities for collaboration and partnership, enhanced data quality through community feedback, and access to complementary data sources published by other organizations.

LOD publication also demonstrates thought leadership and commitment to open innovation, which can enhance organizational reputation and attract talent, partners, and customers who value transparency and collaboration. Additionally, LOD contributions can generate backlinks and citations that help online visibility.

Actionable Insight: Organizations should carefully consider their linked data strategy in the context of openness and accessibility requirements. While not all data should be published as LOD due to competitive or privacy concerns, identifying opportunities for LOD publication can generate significant value and contribute to broader innovation ecosystems.

Real-World Use Cases: Linked Data in Action

The true power of linked data becomes apparent when examining real-world implementations across different industries. These use cases demonstrate how organizations are leveraging linked data to solve complex problems, improve operations, and create new value propositions.

Healthcare: Improving Patient Care and Research

Integrating patient records across different systems:

Healthcare organizations face significant challenges in integrating patient information across different electronic health record systems, laboratory databases, imaging systems, and specialty applications. Linked data approaches enable the creation of unified patient views that combine information from multiple sources while preserving data provenance and maintaining privacy protections.

For example, a patient’s linked data profile might connect demographic information from registration systems, diagnostic codes from clinical encounters, laboratory results from testing facilities, prescription information from pharmacy systems, and imaging studies from radiology departments. This comprehensive view enables healthcare providers to make more informed decisions and avoid dangerous drug interactions or duplicate procedures.

Enabling drug discovery and personalized medicine:

Pharmaceutical companies and research institutions are using linked data to connect genomic information, clinical trial data, drug databases, and patient outcomes to accelerate drug discovery and enable personalized treatment approaches. These connections enable researchers to identify patient populations most likely to benefit from specific treatments and discover new therapeutic targets.

Finance: Enhancing Risk Management and Compliance

Detecting fraud and money laundering:

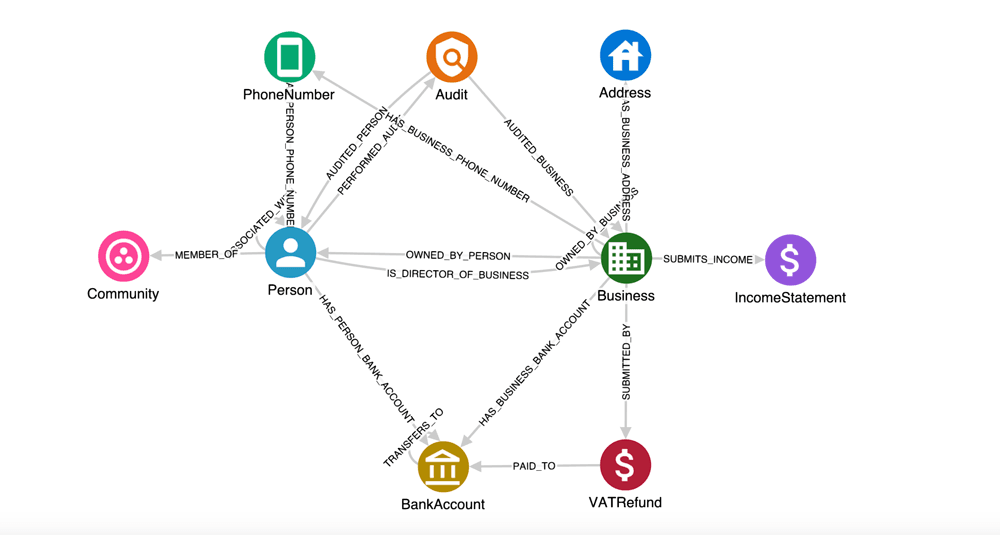

Financial institutions are leveraging linked data to create comprehensive views of customer relationships, transaction patterns, and risk indicators that span multiple accounts, products, and institutions. These connected views enable more effective detection of suspicious activities and fraudulent behavior that might not be apparent when analyzing individual accounts or transactions in isolation.

Linked data approaches enable financial institutions to identify complex fraud schemes involving multiple accounts, shell companies, and coordinated activities across different financial products and services. Machine learning algorithms can analyze these linked data networks to identify unusual patterns and flag potentially fraudulent activities for investigation.

Improving KYC (Know Your Customer) processes:

Know Your Customer regulations require financial institutions to verify customer identities and assess risk levels for regulatory compliance and risk management purposes. Linked data enables institutions to connect customer information with external data sources including regulatory databases, sanctions lists, and beneficial ownership registries to create comprehensive customer risk profiles.

These linked data approaches reduce the manual effort required for KYC compliance while improving accuracy and completeness of customer risk assessments. Institutions can automatically update risk profiles as new information becomes available and identify changes in customer circumstances that require additional review or monitoring.

E-commerce: Powering Product Discovery and Recommendations

Improving search results and product recommendations:

E-commerce platforms are using linked data to create rich product knowledge graphs that connect products with their attributes, categories, brands, customer reviews, and purchasing patterns. These connections enable more accurate search results and personalized recommendations that consider multiple factors including customer preferences, product relationships, and contextual information.

Linked data approaches enable e-commerce platforms to understand semantic relationships between products, such as complementary items, substitutes, and upgrades, which can be used to improve cross-selling and upselling opportunities. Machine learning algorithms can analyze these linked data networks to identify patterns and preferences that inform personalized shopping experiences.

Creating personalized shopping experiences:

By connecting customer data with product information, browsing behavior, and external data sources, e-commerce platforms can create highly personalized shopping experiences that adapt to individual preferences, circumstances, and contexts. These personalized experiences can include customized product catalogs, targeted promotions, and contextual recommendations.

Linked data enables e-commerce platforms to understand customer intent and preferences at a deeper level by analyzing relationships between products, brands, categories, and customer behaviors. This understanding enables more effective personalization that goes beyond simple collaborative filtering to consider semantic relationships and contextual factors.

Example: Amazon’s product knowledge graph connects millions of products with their attributes, customer reviews, purchasing patterns, and relationships to enable sophisticated recommendation algorithms and search capabilities. The system can understand that customers searching for “smartphone accessories” might be interested in cases, chargers, screen protectors, and wireless headphones based on semantic relationships and purchasing patterns.

Publishing: Enhancing Content Discoverability and Reach

Connecting articles, authors, and topics:

Publishing organizations are using linked data to create rich content networks that connect articles with their authors, topics, sources, and related content. These connections enable readers to discover related content, explore author expertise, and understand topical relationships that might not be apparent from traditional categorization approaches.

Linked data approaches enable publishing organizations to create dynamic content relationships that evolve as new content is published and new connections are discovered. These relationships can be used to generate automated content recommendations, create topic-based content collections, and identify emerging trends and themes.

Improving search engine optimization (SEO):

Search engines are increasingly using structured data and linked data principles to understand content meaning and context. Publishing organizations that implement linked data approaches and schema markup can improve their search engine visibility and enable rich snippets and enhanced search results that attract more readers.

Linked data enables search engines to understand relationships between content, authors, and topics, which can improve content ranking and visibility for relevant queries. Publishers can also use linked data to create content syndication and distribution strategies that leverage semantic relationships and automated content discovery.

How to Publish Linked Data: A Step-by-Step Guide

Publishing linked data requires careful planning, technical implementation, and ongoing maintenance. This step-by-step guide provides practical guidance for organizations looking to publish their data as linked data resources that can be discovered, accessed, and utilized by both internal and external users.

Step 1: Identify Your Data Sources

What data do you want to publish as linked data?

The first step in publishing linked data involves identifying which data sources provide the most value for linked data publication. Consider data that has broad utility, connects to external resources, or addresses specific user needs. High-value candidates often include reference data, master data, and datasets that describe entities with broad relevance such as products, people, organizations, or locations.

Organizations should also consider data that can benefit from network effects, where the value increases as more organizations publish related information. Examples include bibliographic data, geographic information, organizational directories, and product catalogs that can be enhanced through connections with external data sources.

Assess the quality and completeness of your data:

Data quality is fundamental to successful linked data publication. Assess your data sources for accuracy, completeness, consistency, and currency. Identify and address data quality issues before publication, as poor quality linked data can undermine user trust and adoption.

Establish data quality metrics and monitoring processes to ensure ongoing data quality. Consider implementing data validation rules, automated quality checks, and feedback mechanisms that enable users to report quality issues and suggest improvements.
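One simple metric to start with is completeness: the share of required fields that are actually filled in. The sketch below computes it for a list of records; the field names and sample rows are invented for illustration, and a real pipeline would add checks for accuracy, consistency, and currency as well.

```python
def completeness(records, required_fields):
    """Return the fraction of required fields that are present and non-empty."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0  # nothing required, so nothing is missing
    filled = sum(
        1
        for record in records
        for field in required_fields
        if record.get(field) not in (None, "")
    )
    return filled / total


products = [
    {"id": "widget-123", "name": "Widget 123", "price": "9.99"},
    {"id": "widget-124", "name": "", "price": None},
]

score = completeness(products, ["id", "name", "price"])
print(f"{score:.0%} of required fields are filled")
```

Tracking a number like this per dataset and per release gives an early signal when a source system starts delivering incomplete records.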

Step 2: Choose a Vocabulary or Ontology

Select a vocabulary or ontology that best describes your data:

Choosing appropriate vocabularies and ontologies is crucial for ensuring interoperability and enabling others to understand and utilize your linked data. Start by investigating existing vocabularies that cover your domain, as reusing established vocabularies improves interoperability and reduces implementation effort.

Popular general-purpose vocabularies include Schema.org for web content, Dublin Core for metadata, and FOAF for people and social relationships. Domain-specific vocabularies exist for many industries and applications, such as FIBO for financial services, HL7 FHIR (which defines an RDF representation) for healthcare, and GoodRelations for e-commerce.

Consider creating your own vocabulary if necessary:

If existing vocabularies don’t adequately cover your data requirements, you may need to create custom vocabulary elements. However, this should be done carefully and sparingly, as custom vocabularies reduce interoperability and require additional documentation and maintenance.

When creating custom vocabulary elements, follow established naming conventions, provide clear definitions and usage guidelines, and consider submitting your vocabulary extensions to relevant standards organizations or community groups for broader adoption.

Step 3: Create URIs for Your Data

Assign unique URIs to each entity in your data:

Develop a consistent URI naming strategy that creates unique, stable identifiers for each entity in your dataset. URI patterns should be meaningful, predictable, and maintainable over time. Consider factors such as organizational structure, data governance, and technical infrastructure when designing URI patterns.

Common URI patterns include hierarchical structures that reflect organizational relationships, opaque identifiers that don’t encode business logic, and hybrid approaches that balance human readability with technical stability. Document your URI patterns and establish governance processes to ensure consistency across different data sources and publication efforts.

Use HTTP URIs so that people can look up those names:

Ensure that your URIs use the http or https scheme and can be dereferenced, so that a client requesting a URI gets back information about the resource it identifies. This requires web infrastructure that can serve linked data content in response to HTTP requests for your URIs.

Implement content negotiation to serve different representations of your linked data based on client preferences. Support multiple serialization formats such as JSON-LD, Turtle, and RDF/XML to accommodate different technical requirements and use cases.
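Content negotiation comes down to reading the client’s Accept header and picking the best format the server can produce. The sketch below shows that selection step in isolation. The function names are hypothetical, the media types are the registered ones for JSON-LD, Turtle, and RDF/XML, and the parser is deliberately simplified: it handles `q` values and `*/*` but not partial wildcards such as `text/*`.

```python
SUPPORTED = ["application/ld+json", "text/turtle", "application/rdf+xml"]


def parse_accept(header: str):
    """Parse an Accept header into (media_type, q) pairs, highest q first."""
    entries = []
    for part in header.split(","):
        fields = [f.strip() for f in part.split(";")]
        media_type = fields[0].lower()
        if not media_type:
            continue
        q = 1.0  # a media type without an explicit q has full preference
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed q as "not acceptable"
        entries.append((media_type, q))
    # sorted() is stable, so entries with equal q keep the client's order.
    return sorted(entries, key=lambda entry: entry[1], reverse=True)


def negotiate(header: str, supported=SUPPORTED, default="text/turtle"):
    """Return the media type to serve, or None if nothing acceptable matches."""
    for media_type, q in parse_accept(header):
        if q <= 0:
            continue
        if media_type in supported:
            return media_type
        if media_type == "*/*":
            return default
    return None


print(negotiate("text/turtle;q=0.5, application/ld+json"))
# -> application/ld+json
```

When `negotiate` returns `None`, a server would typically answer with 406 Not Acceptable or fall back to a default representation; which of the two to choose is a design decision for the publisher.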

Step 4: Create RDF Triples

Describe the relationships between your entities using RDF triples:

Transform your source data into RDF triples that describe entities and their relationships using the vocabularies and ontologies selected in Step 2. This process often involves data mapping and transformation logic that converts existing data formats into RDF representations.

Pay careful attention to data type handling, language tagging, and relationship modeling to ensure that your RDF accurately represents the meaning and structure of your source data. Consider implementing automated testing and validation processes to ensure that your RDF generation processes produce consistent, high-quality output.
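The mapping step can be sketched as a function that turns one source record into triples, with a language tag on text and a datatype on numbers. The output uses N-Triples, a line-based RDF syntax with one statement per line. The `http://company.com/vocab/` property names are hypothetical placeholders for whatever vocabulary was chosen in Step 2, and the escaping covers only the most common characters.

```python
XSD = "http://www.w3.org/2001/XMLSchema#"
VOCAB = "http://company.com/vocab/"  # hypothetical vocabulary namespace


def literal(value, datatype=None, lang=None):
    """Render a literal in N-Triples syntax with an optional language tag or datatype."""
    escaped = (
        str(value)
        .replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
    )
    if lang:
        return f'"{escaped}"@{lang}'
    if datatype:
        return f'"{escaped}"^^<{datatype}>'
    return f'"{escaped}"'


def product_to_ntriples(row, base="http://company.com/products/"):
    """Map one product record (a dict) to a list of N-Triples lines."""
    subject = f"<{base}{row['id']}>"
    return [
        f"{subject} <{VOCAB}name> {literal(row['name'], lang='en')} .",
        f"{subject} <{VOCAB}price> {literal(row['price'], datatype=XSD + 'decimal')} .",
    ]


record = {"id": "widget-123", "name": "Widget 123", "price": "9.99"}
print("\n".join(product_to_ntriples(record)))
```

Keeping the mapping in one small, testable function makes it easier to add the automated validation mentioned above, since each rule can be checked against known input and output.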

Choose an appropriate RDF serialization format:

Select RDF serialization formats that meet your technical requirements and user needs. JSON-LD is often preferred for web applications and APIs, while Turtle provides better human readability for development and debugging. Consider supporting multiple formats to accommodate different use cases and technical preferences.

Implement proper HTTP headers and content negotiation to enable clients to request their preferred serialization formats. Provide clear documentation about supported formats and how to access them through your linked data endpoints.

Step 5: Publish Your Linked Data

Host your Linked Data on a web server:

Deploy your linked data on reliable web infrastructure that can handle expected traffic loads and provide consistent availability. Implement proper HTTP caching, compression, and performance optimization to ensure good user experience and efficient resource utilization.

Consider implementing a SPARQL endpoint that lets users query your linked data directly. SPARQL endpoints provide powerful capabilities for data exploration and analysis but require additional infrastructure and raise security and load-management considerations.

Register your Linked Data with a LOD cloud directory:

If you’re publishing Linked Open Data, register your dataset with relevant directories and catalogs such as the LOD Cloud, DataHub, or domain-specific registries. Registration improves discoverability and enables others to find and utilize your linked data resources.

Provide comprehensive metadata about your dataset including descriptions, licenses, update frequencies, and contact information. Consider implementing dataset metadata using vocabularies such as DCAT (Data Catalog Vocabulary) to improve interoperability with catalog systems.
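A dataset description along these lines might look like the sketch below, which builds a JSON-LD document using DCAT and Dublin Core terms. The dataset URI, title, and download URL are invented placeholders; the license URI is the public CC0 deed. Which DCAT properties a given catalog expects varies, so treat the selection here as an assumption.

```python
import json


def dcat_dataset(uri, title, description, license_uri, download_url):
    """Build a minimal DCAT dataset description as a JSON-LD dictionary."""
    return {
        "@context": {
            "dcat": "http://www.w3.org/ns/dcat#",
            "dct": "http://purl.org/dc/terms/",
        },
        "@id": uri,
        "@type": "dcat:Dataset",
        "dct:title": title,
        "dct:description": description,
        "dcat:distribution": {
            "@type": "dcat:Distribution",
            "dct:license": {"@id": license_uri},
            "dcat:downloadURL": {"@id": download_url},
        },
    }


metadata = dcat_dataset(
    uri="http://company.com/datasets/products",
    title="Product catalog",
    description="Products with names and prices, published as linked data.",
    license_uri="https://creativecommons.org/publicdomain/zero/1.0/",
    download_url="http://company.com/datasets/products.ttl",
)

print(json.dumps(metadata, indent=2))
```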

Tools and Technologies for Working with Linked Data: The Ecosystem

The linked data ecosystem includes a rich variety of tools and technologies that support different aspects of linked data creation, management, and utilization. Understanding these tools and their capabilities is essential for organizations planning linked data implementations.

Graph Databases: Storing and Querying Linked Data

Neo4j:

Neo4j is a leading native graph database that provides high-performance storage and querying for graph-structured data. It is built on the labeled property graph model rather than RDF and is queried with Cypher rather than SPARQL; RDF data can be imported and exported through the neosemantics (n10s) plugin. Neo4j also offers visual query interfaces and extensive APIs for application integration, and it is particularly well-suited for applications that require complex graph traversals and real-time query performance.

RDF Triplestores: Managing RDF Data

Apache Jena: Apache Jena is a comprehensive Java framework for building semantic web and linked data applications. It includes RDF APIs, SPARQL query engines, reasoning capabilities, and tools for working with ontologies. Jena is widely used in academic and research contexts and provides extensive customization capabilities.

RDF4J: RDF4J (formerly Sesame) is a Java framework for processing RDF data that includes parsers, writers, and query engines for working with RDF in various formats. It provides both embedded and server-based deployment options and supports federation across multiple RDF repositories.

Fuseki: Apache Jena Fuseki is a SPARQL server that provides HTTP interfaces for querying and updating RDF data. It can be deployed as a standalone server or embedded within applications and includes web-based administration interfaces for managing datasets and monitoring performance.

Challenges and Limitations of Linked Data: A Balanced Perspective

While linked data offers significant benefits and opportunities, organizations must also understand and address various challenges and limitations to ensure successful implementations. A realistic assessment of these challenges enables better planning and more effective risk mitigation strategies.

Data Quality: Ensuring Accuracy and Completeness

Addressing data inconsistencies and errors: Linked data environments often integrate information from multiple sources with different data quality standards, update frequencies, and validation processes. This diversity can lead to inconsistencies, conflicts, and errors that undermine the reliability and utility of linked data applications.

Data quality challenges are amplified in linked data environments because errors can propagate through link relationships and affect downstream applications and analyses. For example, incorrect entity linking can create false relationships that mislead users and applications, while incomplete data can result in missed connections and opportunities.

Organizations must implement comprehensive data quality management processes that include data profiling, validation rules, conflict resolution procedures, and ongoing monitoring. These processes should address both technical data quality issues (such as format errors and constraint violations) and semantic quality issues (such as incorrect relationships and missing context).

Implementing data validation and cleansing processes: Effective data validation requires both automated and manual processes that can identify and correct data quality issues at multiple stages of the linked data lifecycle. Automated validation can check for technical compliance with schemas and ontologies, while manual review processes can address semantic and contextual quality issues.

Data cleansing processes should be designed to preserve data provenance and maintain audit trails that enable users to understand the quality and reliability of linked data resources. Consider implementing data quality metrics and reporting capabilities that provide transparency about data quality issues and improvement efforts.
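
As a rough illustration of automated validation that preserves provenance, the sketch below checks triples against a few technical rules and records why each rejected triple failed. The Triple class, the rule table, and the example predicates are assumptions made for this example; a production setup would more likely rely on a schema language such as SHACL.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str
    source: str  # provenance: which dataset supplied the statement


def is_absolute_iri(value: str) -> bool:
    parts = urlparse(value)
    return bool(parts.scheme and parts.netloc)


def is_iso_date(value: str) -> bool:
    try:
        date.fromisoformat(value)
        return True
    except ValueError:
        return False


# Hypothetical rule table: predicate IRI -> check applied to the object value.
OBJECT_RULES = {
    "http://schema.org/birthDate": is_iso_date,
    "http://schema.org/url": is_absolute_iri,
}


def validate(triples):
    """Split triples into accepted ones and (triple, reason) rejections."""
    accepted, rejected = [], []
    for t in triples:
        rule = OBJECT_RULES.get(t.predicate)
        if not is_absolute_iri(t.subject):
            rejected.append((t, "subject is not an absolute IRI"))
        elif not is_absolute_iri(t.predicate):
            rejected.append((t, "predicate is not an absolute IRI"))
        elif rule is not None and not rule(t.obj):
            rejected.append((t, "object fails the rule for " + t.predicate))
        else:
            accepted.append(t)
    return accepted, rejected


sample = [
    Triple("http://example.org/alice", "http://schema.org/birthDate", "1990-02-28", "crm"),
    Triple("http://example.org/bob", "http://schema.org/birthDate", "1990-02-30", "hr"),
    Triple("carol", "http://schema.org/url", "http://example.org/carol", "web"),
]
accepted, rejected = validate(sample)
for triple, reason in rejected:
    print(triple.source, "->", reason)
```

Because every rejection keeps the originating source and a reason, the output can feed the quality metrics and audit trails described above. Semantic issues such as wrong relationships still need manual review.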

Scalability: Handling Large Datasets

Optimizing graph database performance: Graph databases and RDF triplestores face unique performance challenges when handling large datasets with complex relationship structures. Query performance can degrade significantly as dataset sizes increase, particularly for queries that require extensive graph traversals or complex reasoning operations.

Performance optimization techniques include careful index design, query optimization, data partitioning, and caching, all of which aim to reduce query execution times and improve system responsiveness. Organizations should also consider the trade-offs between query flexibility and performance when designing their linked data architectures.
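
To show what index design means for triples, the following minimal in-memory sketch keeps two orderings of the same data so that two common lookup patterns avoid a full scan. The TripleIndex class and its method names are hypothetical; real triplestores typically maintain several such orderings in on-disk structures.

```python
from collections import defaultdict


class TripleIndex:
    """Toy triple store with two indexes: subject-predicate-object and
    predicate-object-subject."""

    def __init__(self):
        self._spo = defaultdict(lambda: defaultdict(set))
        self._pos = defaultdict(lambda: defaultdict(set))

    def add(self, s, p, o):
        # Every write updates both indexes: faster reads, costlier writes.
        self._spo[s][p].add(o)
        self._pos[p][o].add(s)

    def objects(self, s, p):
        """Answer the pattern (s, p, ?o) without scanning all triples."""
        return set(self._spo.get(s, {}).get(p, ()))

    def subjects(self, p, o):
        """Answer the pattern (?s, p, o) without scanning all triples."""
        return set(self._pos.get(p, {}).get(o, ()))


index = TripleIndex()
index.add("ex:alice", "ex:worksFor", "ex:acme")
index.add("ex:bob", "ex:worksFor", "ex:acme")
index.add("ex:alice", "ex:knows", "ex:bob")

print(index.objects("ex:alice", "ex:knows"))
print(index.subjects("ex:worksFor", "ex:acme"))
```

The sketch also makes the flexibility trade-off visible: a pattern such as (?s, ?p, o) has no matching index here and would need either a scan or a third ordering.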

Using distributed computing techniques: Large-scale linked data applications may require distributed computing approaches that can process queries and analyses across multiple servers or cloud resources. Distributed approaches can improve performance and scalability but introduce additional complexity in areas such as data consistency, query coordination, and failure handling.

Consider implementing horizontal scaling strategies that can distribute data and processing loads across multiple nodes while maintaining query performance and data consistency. Cloud-based solutions can provide elastic scaling capabilities that adapt to changing demand patterns and usage requirements.
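
One simple way to distribute data across nodes is hash partitioning by subject, sketched below. The function names and the choice of subject as the partitioning key are assumptions for illustration; other schemes partition by predicate or by graph structure.

```python
import hashlib


def shard_for(subject: str, num_shards: int) -> int:
    """Map a subject IRI to a shard number using a stable hash."""
    # hashlib is used instead of the built-in hash(), which is randomized
    # per process for strings and so would not be stable across nodes.
    digest = hashlib.sha256(subject.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(triples, num_shards):
    """Group (subject, predicate, object) triples into per-shard lists."""
    shards = [[] for _ in range(num_shards)]
    for s, p, o in triples:
        shards[shard_for(s, num_shards)].append((s, p, o))
    return shards


triples = [
    ("http://example.org/alice", "ex:knows", "http://example.org/bob"),
    ("http://example.org/alice", "ex:worksFor", "http://example.org/acme"),
    ("http://example.org/bob", "ex:worksFor", "http://example.org/acme"),
]
shards = partition(triples, num_shards=4)
for number, shard in enumerate(shards):
    print(number, len(shard))
```

Keeping all statements about one subject on the same node makes single-entity lookups local, while queries that follow links between subjects may touch several nodes. That is where the query coordination cost mentioned above comes from.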

Interoperability: Connecting Different Datasets

Addressing vocabulary mismatches and semantic conflicts: Different linked data sources often use different vocabularies, ontologies, and modeling approaches that can create interoperability challenges. Even when sources use the same vocabularies, they may interpret and apply them differently, leading to semantic conflicts and integration difficulties.

Vocabulary mapping and alignment techniques can help address these challenges by creating explicit correspondences between different vocabularies and ontologies. However, these mappings require domain expertise and ongoing maintenance to ensure accuracy and currency as vocabularies evolve.
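
A vocabulary mapping can be as simple as a lookup table from source predicates to target predicates. The sketch below rewrites FOAF predicates to schema.org equivalents and reports predicates that have no mapping yet. The two correspondences shown are illustrative assumptions, not an authoritative alignment, and would need review by someone who knows both vocabularies.

```python
# Illustrative mapping table (an assumption, not an official alignment).
PREDICATE_MAP = {
    "http://xmlns.com/foaf/0.1/name": "http://schema.org/name",
    "http://xmlns.com/foaf/0.1/homepage": "http://schema.org/url",
}


def align(triples, mapping):
    """Rewrite mapped predicates; collect predicates that are neither mapped
    nor already in the target vocabulary so they can be reviewed."""
    targets = set(mapping.values())
    aligned, unmapped = [], set()
    for s, p, o in triples:
        if p in mapping:
            p = mapping[p]
        elif p not in targets:
            unmapped.add(p)
        aligned.append((s, p, o))
    return aligned, unmapped


source_triples = [
    ("http://example.org/alice", "http://xmlns.com/foaf/0.1/name", "Alice"),
    ("http://example.org/alice", "http://schema.org/url", "http://alice.example.org/"),
    ("http://example.org/alice", "http://xmlns.com/foaf/0.1/nick", "ali"),
]
aligned, unmapped = align(source_triples, PREDICATE_MAP)
print(aligned)
print(unmapped)
```

Reporting unmapped predicates instead of silently dropping them supports the ongoing maintenance that mappings need as vocabularies change.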

Using data mapping and transformation techniques: Data integration in linked data environments often requires sophisticated mapping and transformation processes that can reconcile different data models, vocabularies, and quality standards. These processes may include entity resolution, schema mapping, and data harmonization techniques.

Consider implementing flexible integration architectures that can accommodate evolving data sources and requirements without requiring extensive system modifications. Virtual integration approaches can provide unified access to heterogeneous data sources while minimizing data replication and synchronization challenges.
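
Entity resolution, one of the techniques named above, can be sketched as grouping records by a normalized label and proposing owl:sameAs links within each group. Exact matching on a normalized name is a deliberately naive strategy chosen for brevity, and the example IRIs are made up. The links it produces are candidates for review, not confirmed matches.

```python
import unicodedata
from collections import defaultdict
from itertools import combinations

OWL_SAME_AS = "http://www.w3.org/2002/07/owl#sameAs"


def normalize(label: str) -> str:
    """Strip accents, case differences, and extra whitespace from a label."""
    decomposed = unicodedata.normalize("NFKD", label)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(stripped.casefold().split())


def same_as_links(labels):
    """labels maps entity IRI -> label. Returns candidate owl:sameAs triples
    for every pair of entities whose normalized labels are identical."""
    buckets = defaultdict(list)
    for iri, label in labels.items():
        buckets[normalize(label)].append(iri)
    links = []
    for iris in buckets.values():
        for a, b in combinations(sorted(iris), 2):
            links.append((a, OWL_SAME_AS, b))
    return links


labels = {
    "http://a.example/person/1": "Zoë  Müller",
    "http://b.example/staff/9": "zoe muller",
    "http://a.example/person/2": "Bob Stone",
}
links = same_as_links(labels)
print(links)
```

Two different people can share a name, so a real pipeline would compare additional attributes before asserting identity. A false owl:sameAs link is exactly the kind of error that propagates through link relationships.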

Security: Protecting Sensitive Data

Implementing access control and authentication mechanisms: Linked data environments require sophisticated security mechanisms that can control access to specific resources, relationships, and query capabilities while maintaining the flexibility and discoverability that make linked data valuable.

Traditional database security models may not be sufficient for linked data environments where relationships can span multiple datasets and security domains. Organizations need to implement fine-grained access control mechanisms that can protect sensitive information while enabling appropriate data sharing and collaboration.
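
Fine-grained access control can be pictured as a filter applied to quads (triples plus the named graph they belong to) before results reach the caller. The Policy class and the example roles below are hypothetical; real triplestores expose their own, differing, security configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    allowed_graphs: frozenset              # named graphs the role may read
    hidden_predicates: frozenset = frozenset()  # predicates never shown


def visible(quads, policy):
    """Return only the quads that the given policy permits the caller to see."""
    return [
        (s, p, o, g)
        for s, p, o, g in quads
        if g in policy.allowed_graphs and p not in policy.hidden_predicates
    ]


quads = [
    ("ex:alice", "ex:name", "Alice", "graph:public"),
    ("ex:alice", "ex:salary", "90000", "graph:hr"),
    ("ex:alice", "ex:manager", "ex:bob", "graph:hr"),
]

# Hypothetical roles for illustration.
public_reader = Policy(allowed_graphs=frozenset({"graph:public"}))
team_lead = Policy(
    allowed_graphs=frozenset({"graph:public", "graph:hr"}),
    hidden_predicates=frozenset({"ex:salary"}),
)

print(visible(quads, public_reader))
print(visible(quads, team_lead))
```

Combining graph-level and predicate-level rules lets one dataset serve several audiences. The filter must run on every query path, including inferred statements, or hidden facts can leak through relationships.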

Using encryption to protect data in transit and at rest: Sensitive linked data requires protection both during transmission over networks and when stored in databases and file systems. Encryption strategies should consider the unique characteristics of linked data, such as the need to preserve queryability and the challenges of encrypting graph-structured data.

Consider implementing privacy-preserving techniques such as differential privacy, data anonymization, and selective disclosure that can protect sensitive information while maintaining the utility and value of linked data resources.
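
As one example of these techniques, differential privacy can be applied to aggregate query results by adding calibrated noise. The sketch below uses the Laplace mechanism on a count query, assuming each individual contributes at most one to the count (sensitivity 1). The function name and the epsilon value are illustrative choices.

```python
import random


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Return the count plus Laplace noise with scale 1 / epsilon.

    Assumes the query has sensitivity 1: adding or removing one individual
    changes the true count by at most 1.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # The difference of two independent exponential draws with rate epsilon
    # follows a Laplace distribution with mean 0 and scale 1 / epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


rng = random.Random(7)  # fixed seed only to make the demonstration repeatable
# Suppose a SPARQL COUNT over patients with some condition returned 42.
print(noisy_count(42, epsilon=0.5, rng=rng))
```

A smaller epsilon gives stronger privacy and noisier answers. Repeating the same query spends more of the privacy budget, so a deployed system would also need to track cumulative epsilon per user or dataset.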

Actionable Insight: Successfully addressing linked data challenges requires comprehensive planning, appropriate technology choices, and ongoing management processes. Organizations should develop realistic expectations about implementation complexity and invest in the necessary skills, tools, and processes to address these challenges effectively. Starting with smaller, less complex implementations can help organizations build experience and capabilities before tackling more challenging linked data scenarios.

The Future of Linked Data: Emerging Trends

The linked data landscape continues to evolve rapidly, driven by advances in artificial intelligence, emerging technologies, and changing business requirements. Understanding these trends helps organizations prepare for future opportunities and challenges in the linked data ecosystem.

Linked Data and AI: Enhancing Machine Learning

Using Linked Data to improve the accuracy and explainability of AI models: Machine learning models often struggle with data integration, feature engineering, and explainability challenges that linked data can help address. Knowledge graphs built from linked data provide rich, structured representations of domain knowledge that can improve model training, validation, and interpretation.

Linked data enables machine learning systems to incorporate external knowledge, understand relationships between entities, and provide more interpretable results by grounding predictions in explicit knowledge representations. This capability is particularly valuable for applications in healthcare, finance, and other domains where explainability and trustworthiness are critical requirements.

Knowledge graph embeddings and graph neural networks represent emerging techniques that can leverage linked data structures to improve machine learning performance across various tasks including entity resolution, link prediction, and recommendation systems. These approaches combine the structured knowledge representation capabilities of linked data with the pattern recognition capabilities of machine learning.
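
To give a feel for how an embedding model supports link prediction, the sketch below uses TransE-style scoring, one well-known embedding approach, in which a triple is plausible when head + relation lands close to tail in vector space. The two-dimensional vectors are hand-picked for illustration rather than learned from data, and the entity and relation names are made up.

```python
import math


def transe_score(head, relation, tail):
    """Negative Euclidean distance between (head + relation) and tail.
    Higher scores mean the triple is considered more plausible."""
    return -math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))


# Toy embeddings, chosen by hand. A real model learns these from the graph.
entities = {
    "Paris": (1.0, 0.0),
    "France": (1.0, 1.0),
    "Berlin": (3.0, 0.0),
    "Germany": (3.0, 1.0),
}
relations = {"capitalOf": (0.0, 1.0)}


def rank_tails(head, relation):
    """Rank every other entity as a candidate tail for (head, relation, ?)."""
    h, r = entities[head], relations[relation]
    candidates = [name for name in entities if name != head]
    return sorted(
        candidates,
        key=lambda name: transe_score(h, r, entities[name]),
        reverse=True,
    )


print(rank_tails("Paris", "capitalOf"))
print(rank_tails("Berlin", "capitalOf"))
```

In practice the vectors have many more dimensions and are trained so that triples present in the knowledge graph score higher than corrupted ones. The ranking step is then used to suggest missing links.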

Linked Data and Blockchain: Ensuring Data Integrity

Using blockchain to verify the provenance and authenticity of Linked Data: Blockchain technologies offer potential solutions for ensuring the integrity, provenance, and authenticity of linked data resources. By recording data publication events, updates, and access patterns on immutable ledgers, blockchain can provide verifiable audit trails that enhance trust in linked data ecosystems.
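
The core idea behind a verifiable audit trail can be shown with a hash-chained log, where each entry commits to the one before it. This is a deliberately simplified stand-in for a blockchain: it has no distribution or consensus, and the event fields are hypothetical. It does show how tampering with a recorded publication event becomes detectable.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash, event):
    """Hash an event together with the hash of the previous entry."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log, event):
    """Add an event to the log, chaining it to the current last entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": entry_hash(prev_hash, event)})


def verify(log):
    """Recompute every hash and confirm the chain is unbroken."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append(log, {"action": "publish", "dataset": "http://example.org/dataset/1", "version": 1})
append(log, {"action": "update", "dataset": "http://example.org/dataset/1", "version": 2})
print(verify(log))

log[0]["event"]["version"] = 99  # simulate tampering with history
print(verify(log))
```

Storing only hashes of datasets on a ledger, rather than the data itself, is a common design choice because it keeps the ledger small and avoids publishing sensitive content.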

Decentralized identity systems built on blockchain technologies can enable more secure and privacy-preserving approaches to linked data publication and access control. These systems can provide cryptographic proof of data ownership and authorization without requiring centralized authority or revealing sensitive information about data publishers or consumers.

Smart contracts can automate data licensing, usage tracking, and compensation mechanisms for linked data resources, enabling new business models and incentive structures that encourage high-quality data publication and sharing. These mechanisms can help address the sustainability challenges that have limited the growth of linked open data ecosystems.

Conclusion: Embracing the Power of Linked Data

Throughout this comprehensive guide, we’ve explored the transformative potential of linked data across industries, applications, and use cases. From healthcare systems that save lives through better patient data integration to e-commerce platforms that create personalized shopping experiences, linked data is reshaping how organizations manage, integrate, and derive value from their information assets.

The key concepts we’ve covered, from Tim Berners-Lee’s four foundational principles to the technical components of RDF, SPARQL, and semantic vocabularies, provide the building blocks for creating interconnected knowledge networks that transcend traditional data silos. These networks make data easier to discover, integrate, and reason over, which in turn supports competitive advantage and innovation.

The real-world use cases demonstrate that linked data is not a theoretical concept but a practical approach that organizations are using today to solve complex challenges and create new opportunities. Whether improving patient care through integrated health records, detecting financial fraud through connected data analysis, or enhancing content discoverability through semantic relationships, linked data delivers measurable value across diverse scenarios.

However, success with linked data requires careful attention to implementation challenges including data quality, scalability, interoperability, and security. Organizations that address these challenges systematically and invest in appropriate tools, technologies, and processes are positioned to realize the full benefits of linked data approaches.

Looking forward, the convergence of linked data with artificial intelligence, blockchain technologies, and emerging platforms like the metaverse promises even greater opportunities for innovation and value creation. Organizations that build linked data capabilities today will be better positioned to leverage these future opportunities and adapt to evolving technological landscapes.

The time to embrace linked data is now. Start by identifying specific use cases where linked data can address existing challenges or create new opportunities within your organization. Begin with pilot projects that demonstrate value and build organizational capabilities before scaling to broader implementations. Invest in training your teams on linked data principles, technologies, and best practices.

Most importantly, remember that linked data is ultimately about connecting information to create knowledge, and knowledge to drive action. By breaking down data silos and creating interconnected knowledge networks, organizations can unlock insights, improve decision-making, and create value that would be impossible with traditional data management approaches.

The future belongs to organizations that can effectively harness the power of linked data to create intelligent, adaptive, and interconnected systems that drive innovation and competitive advantage. The journey begins with understanding the principles and possibilities we’ve explored in this guide, but it continues with practical implementation, continuous learning, and ongoing adaptation to new opportunities and challenges.

Take the first step today by exploring how linked data can transform your organization’s approach to data management, integration, and utilization. A focused pilot built around one well-understood use case is often the clearest way to see where connected data creates value.